We’re thrilled to announce our latest breakthrough research article, Modeling Spaced Repetition with LSTMs, created in cooperation between the SuperMemo team and scientists from the Faculty of Mathematics and Computer Science of the Adam Mickiewicz University in Poznań. It explores the potential of Long Short-Term Memory (LSTM) neural networks in the realm of spaced repetition.

At SuperMemo, we pioneered research in computer-supported repetition optimization (spaced repetition) and published the world’s first computer application using this method. Today, the method has more than 30 years of history and development behind it. We are proud not only to have started a trend that is now followed by all leading educational apps, but also to present another milestone in the development of this method: the use of machine learning to predict memory retention effectively and to provide optimal learning guidance.

About our research – AI in SuperMemo

In this research, we conducted an extensive review of earlier work on the subject, testing and comparing different methods to select the most effective one. By leveraging the expert knowledge of spaced repetition we have built up over the years, as well as the massive amount of learning data gathered in the www.SuperMemo.com ecosystem, we were able to speed up the training of our LSTM model and make it even more effective. In a sense, the machine-learning-based SuperMemo algorithm is similar to our expert algorithm, but it uses different optimization methods to reach the same goals.

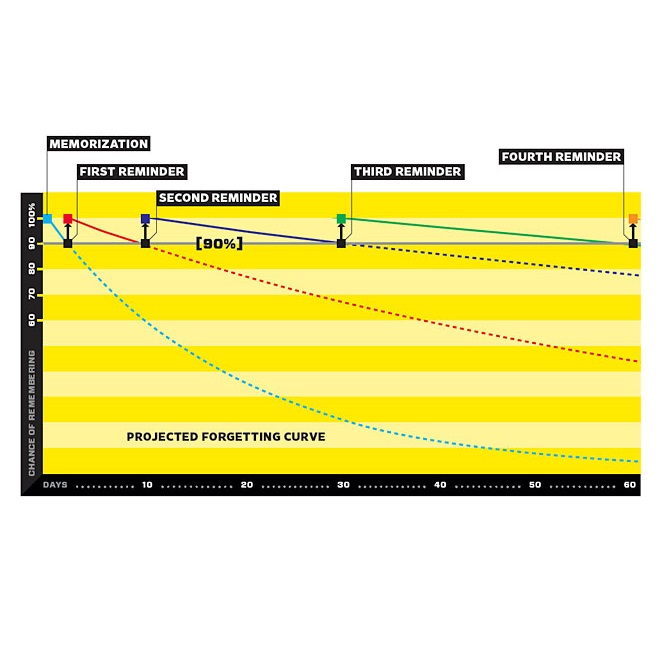

Our LSTM model is designed to predict the probability that a user will remember a particular item and to plan optimal review intervals, ultimately minimizing the time spent on reviews while maintaining a high level of knowledge retention. We compared this model with other machine learning approaches, such as logistic regression, feedforward neural networks, and half-life regression, and found that our LSTM model consistently outperformed them. This demonstrates the value of LSTM neural networks in spaced repetition and their potential to make SuperMemo even more effective.
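To illustrate how a predicted recall probability can be turned into a review interval, here is a minimal sketch. It is not the SuperMemo LSTM or its scheduler: it assumes the exponential forgetting curve used by half-life regression (one of the baselines mentioned above), p(t) = 2^(-t/h), where h is an item's memory half-life in days. The function names, the half-life value, and the target retention of 0.9 are hypothetical choices for illustration. The next review is scheduled for the moment predicted recall drops to the target.

```python
import math


def recall_probability(elapsed_days: float, half_life_days: float) -> float:
    """Predicted probability of recall after `elapsed_days`.

    Assumes (hypothetically) the half-life regression forgetting curve
    p = 2 ** (-t / h); an LSTM would instead predict p from the review history.
    """
    return 2.0 ** (-elapsed_days / half_life_days)


def next_interval(half_life_days: float, target_retention: float = 0.9) -> float:
    """Days until predicted recall falls to `target_retention`.

    Solving 2 ** (-t / h) = r for t gives t = -h * log2(r).
    """
    if not 0.0 < target_retention < 1.0:
        raise ValueError("target_retention must be strictly between 0 and 1")
    return -half_life_days * math.log2(target_retention)


if __name__ == "__main__":
    h = 10.0  # hypothetical half-life estimated by some model, in days
    t = next_interval(h, target_retention=0.9)
    # Prints: review after 1.52 days; predicted recall then: 0.90
    print(f"review after {t:.2f} days; predicted recall then: {recall_probability(t, h):.2f}")
```

The target retention captures the trade-off described above: raising it shortens intervals (more reviews, higher retention), while lowering it lengthens them (less review time, more forgetting). With the same half-life of 10 days, a target of 0.8 gives an interval of about 3.22 days.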

By incorporating long-term memory theory into LSTM models, we can provide an effective machine learning alternative to the expert methods that have so far been unbeaten. Moreover, the machine-learning-based SuperMemo algorithm is open to further development, including the integration of multiple new parameters. For example, we may incorporate factors such as time of day, sleep cycle, stress or fatigue levels, content dependencies, or language correlations into the computation. We can then study how these parameters influence learning, continually refining and individualizing our methods.

Conference in Prague

We are excited to present this article at CSEDU, the International Conference on Computer Supported Education, in Prague on April 21st, 2023. As we continue to revolutionize learning and personal development, we believe these findings will contribute significantly to the ongoing improvement of SuperMemo.

Our journey in perfecting spaced repetition algorithms has always been about providing the best possible learning experience for you. With this new research, we aim to continue our tradition of innovation and excellence, making SuperMemo even more effective and adaptive to your learning needs.

The SuperMemo Team