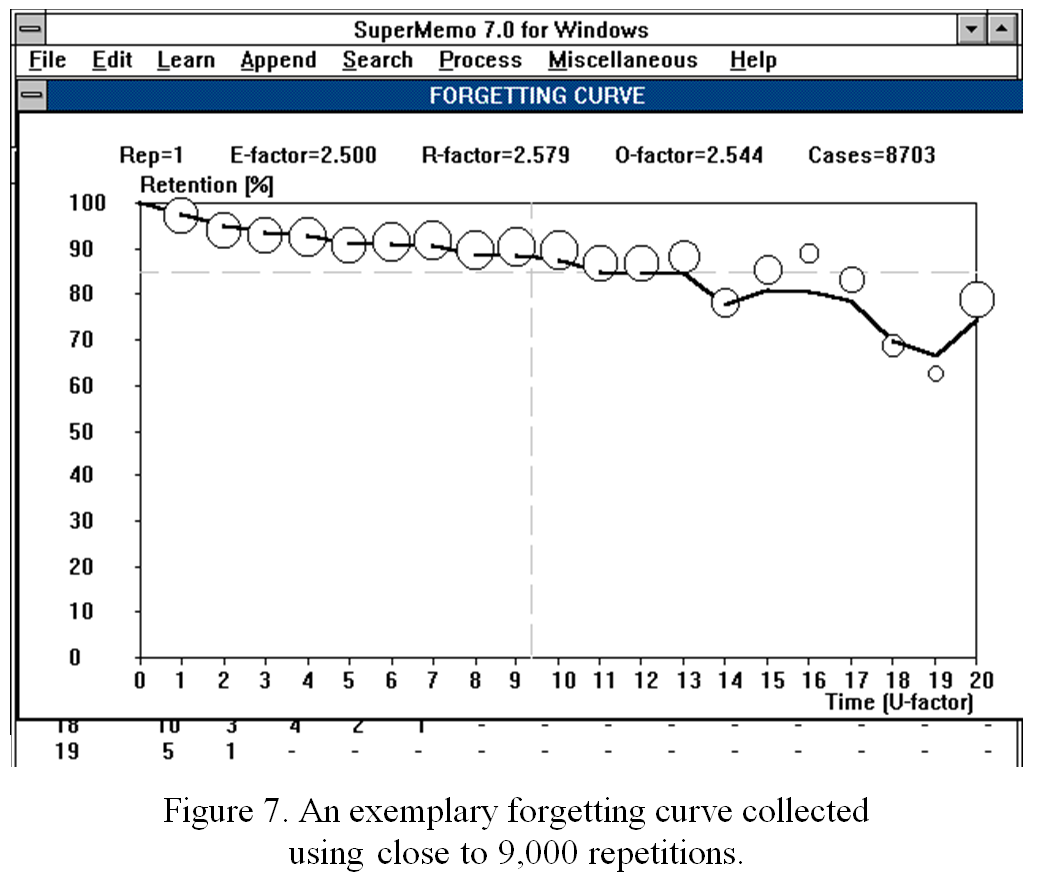

Dr Piotr Wozniak, June 2018

Contents

- Introduction

- 1985: Birth of SuperMemo

- 1986: First steps of SuperMemo

- 1987: SuperMemo 1.0 for DOS

- 1988: Two components of memory

- 1989: SuperMemo adapts to user memory

- 1990: Universal formula for memory

- 1991: Employing forgetting curves

- 1994: Exponential nature of forgetting

- 1995: Hypermedia SuperMemo

- 1997: Employing neural networks

- 1999: Choosing the name: “spaced repetition”

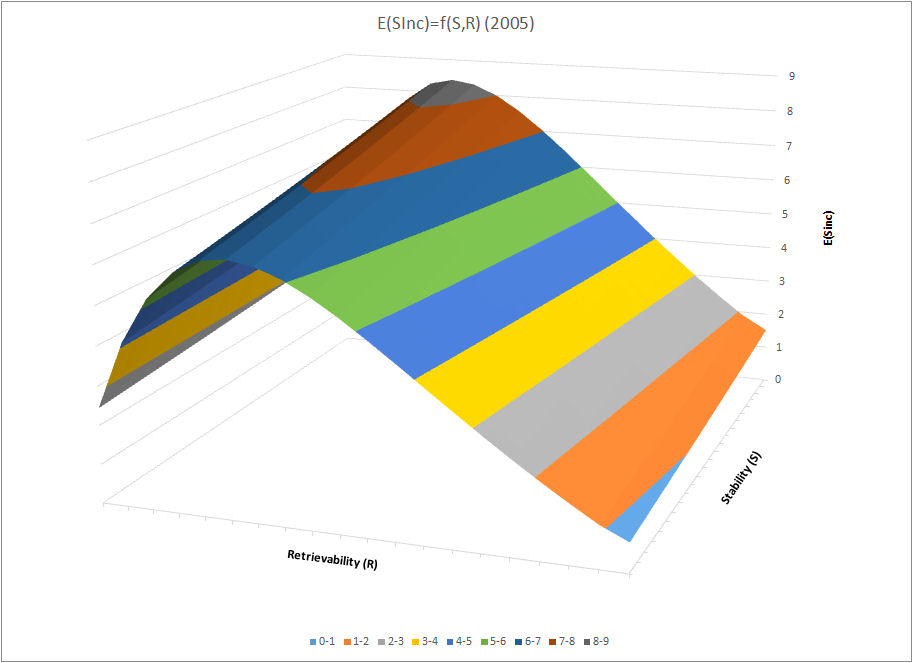

- 2005: Stability increase function

- 2014: Algorithm SM-17

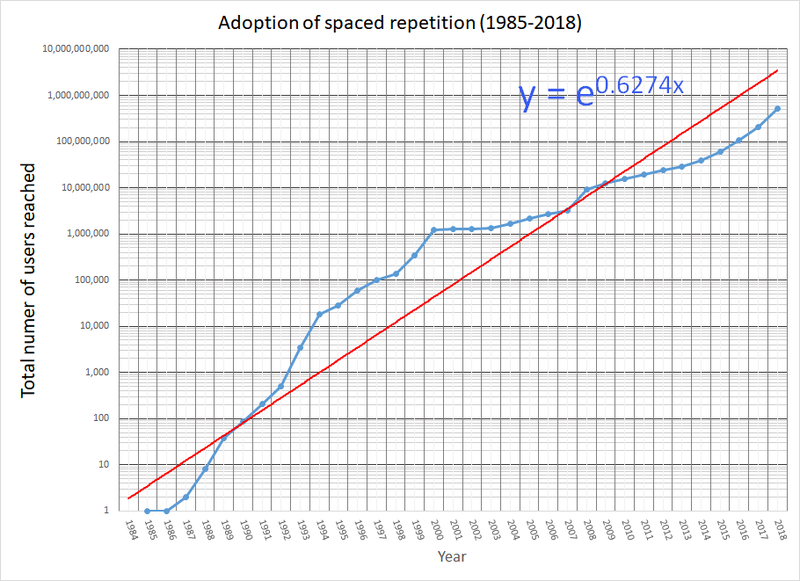

- Exponential adoption of spaced repetition

- Summary of memory research

- The anatomy of failure and success

Introduction

True history

The popular history of spaced repetition is full of myths and falsehoods. This text is to tell you the true story. The problem with spaced repetition is that it became too popular for its own effective replication. Like a fast mutating virus it keeps jumping from application to application, and tells its own story while accumulating errors on the way.

Who invented spaced repetition?

This is the story of how I solved the problem of forgetting. I figured out how to learn efficiently. Modesty is a waste of time, therefore I will add that I think I actually know how to significantly amplify human intelligence. In short: memory underlies knowledge which underlies intelligence. If we can control what we store in memory and what we forget, we can control our problem solving capacity. In a very similar way, we can also amplify artificial intelligence. It’s a great relief to be able to type in those proud words after many years of a gag order imposed by commercial considerations.

Back in the early 1990s, I thought I knew how to turn education systems around the world upside down and make them work for all students. However, any major change requires a cultural paradigm shift. It is not enough for a poor student from a poor communist country to announce the potential for a change. I did that, in my Master’s Thesis, but I found little interest in my ideas. Even my own family was dismissive. Luckily, I met a few smart friends at my university who declared they would use my ideas to set up a business. Like Microsoft changed the world of personal computing, we would change the way people learn. We owned a powerful learning tool: SuperMemo. However, for SuperMemo to conquer the world it had to ditch its roots for a while. To convince others, SuperMemo had to be a product of pure science. It could not have just been an idea conceived by a humble student.

To root SuperMemo in science, we made a major effort to publish our ideas in a peer-reviewed journal, adopted the little-known scientific term “spaced repetition”, and set our learning technology in the context of learning theory and the history of research in psychology. I am very skeptical of schools, certificates, and titles. However, I still went as far as to earn a PhD in economics of learning, to add respectability to my words.

Today, when spaced repetition is finally showing up in hundreds of respectable learning tools, applications, and services, we can finally stake the claim and plant the flag at the summit. Usership is going into hundreds of millions.

If you read SuperMemopedia here you may conclude that “Nobody should ever take credit for discovering spaced repetition”. I beg to disagree. In this text I will claim the full credit for the discovery, and some solid credit for the dissemination of the idea. My contribution to the latter is waning thanks to the power of the idea itself and a growing circle of people involved in the concept (well beyond our company).

Perpetuating myths

It is Krzysztof Biedalak (CEO) who has the least patience with fake news about spaced repetition. I therefore credit this particular text and the effort in mythbusting to his resolve to keep the history straight. SuperMemo for DOS was born 30 years ago (1987). Let’s pay some tribute.

If you believe that Ebbinghaus invented spaced repetition in 1885, I apologize. When compiling the history of SuperMemo, we put the name of the venerable German psychologist at the top of the chronological list and the myth was born. Ebbinghaus never worked on spaced repetition.

Writing about the history of spaced repetition is not easy. Each time we do it, we generate more myths through distortions and misunderstandings. Let’s then make it clear and emphatic. There was a great deal of memory research before SuperMemo. However, each time I give generous credit, keep in mind the words of Biedalak:

If SuperMemo is a space shuttle, we need to acknowledge prior work done on bicycles. In the meantime, our competition is busy trying to replicate our shuttle, but the efforts are reminiscent of the Soviet Buran program. Buran has at least made one space flight. It was unmanned.

This text is to set the facts straight, and openly disclose the early steps of spaced repetition. This is a fun foray into the past that brings me a particular delight with the sense of “mission accomplished”. Now that we can call our effort a global success, there is no need to make it more respectable than it really is. No need to make it more scientific, more historic, or more certified.

Spaced repetition is here and it is here to stay. We did it!

Credits

The list of contributors to the idea of spaced repetition is too long to include in this short article. Some names do not show up because I simply ran out of allocated time to describe their efforts. Dr Phil Pavlik has probably the most fresh ideas in the field. An array of memory researchers investigate the impact of spacing on memory. Duolingo and Quizlet are leading competitors with a powerful impact on the good promotion of the idea. I failed to list many of my fantastic teachers who inspired my thinking. The whole host of hard-working and talented people at SuperMemo World would also deserve a mention. Users of SuperMemo constantly contribute incredible suggestions that drive further progress. The reward for the most impactful explanation of spaced repetition should go to Gary Wolf of Wired, but there were many more. Perhaps some other day, I will have more time to write about all those great people in detail.

1985: Birth of SuperMemo

The drive for better learning

I spent 22 long years in the education system. Old truths about schooling match my case perfectly. I never liked school, but I always liked to learn. I never let school interfere with my learning. At entry to university, after 12 years in the public school system, I still loved learning. Schooling did not destroy that love for two main reasons: (1) the system was lenient with me, and (2) I had full freedom to learn what I liked at home. In Communist Poland, I never truly experienced the toxic whip of heavy schooling. The system was negligent and I loved the ensuing freedoms.

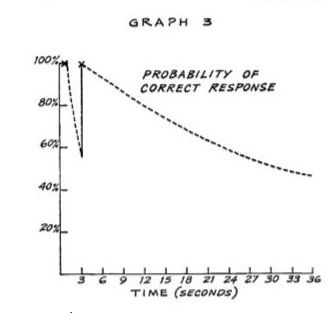

We all know that the best learning comes from passion. It is powered by the learn drive. My learn drive was strong and it was mixed with a bit of frustration. The more I learned, the more I could see the power of forgetting. I could not remedy forgetting by more learning. My memory was not bad in comparison with other students, but it was clearly a leaky vessel.

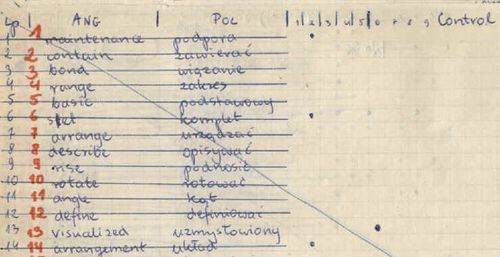

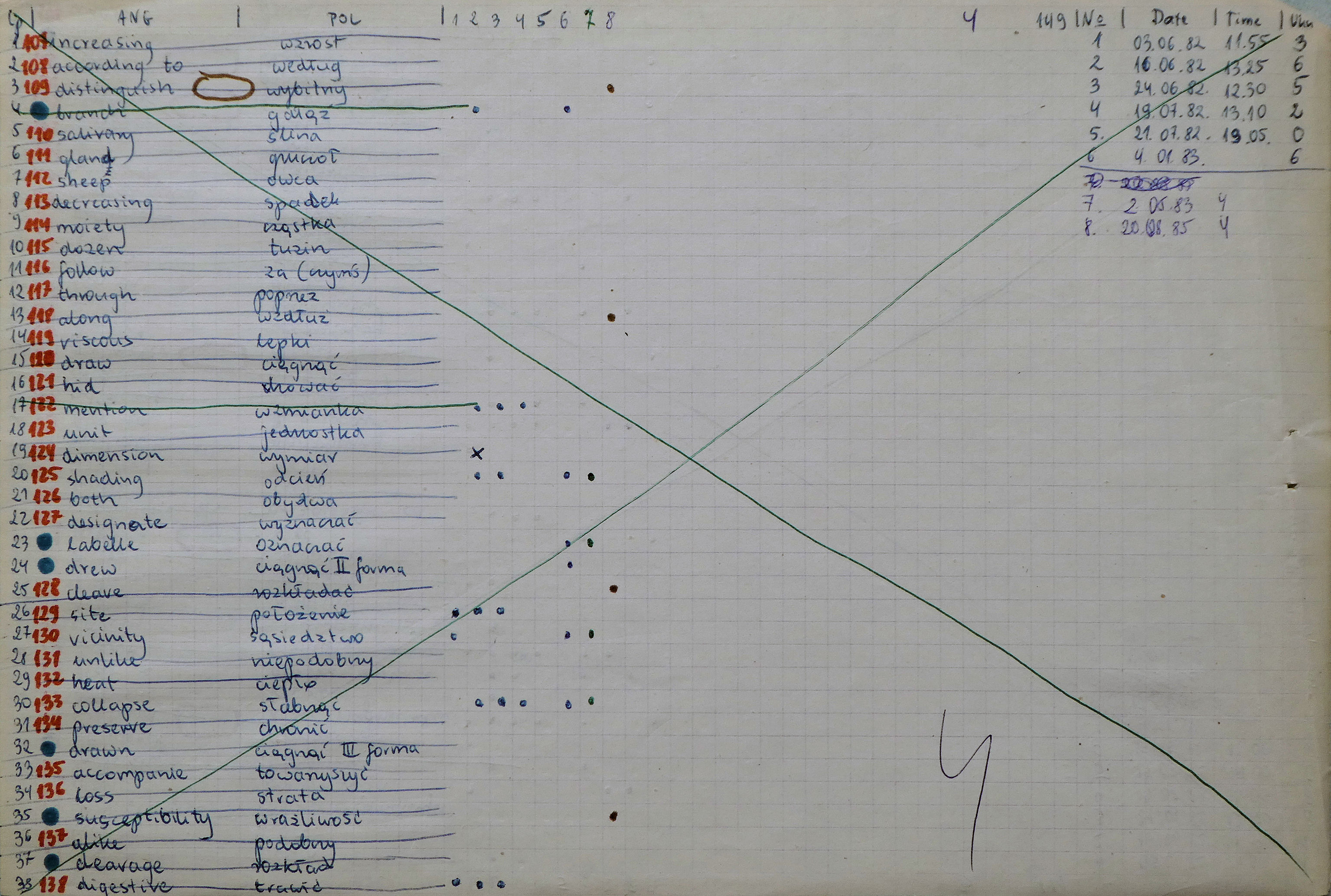

In 1982, I paid more attention to what most students discover sooner or later: the testing effect. I started formulating my knowledge for active recall. I would write questions on the left side of a page and answers in a separate column to the right:

This way, I could cover the answers with a sheet of paper, and employ active recall to get a better memory effect from review. This was a slow process but the efficiency of learning increased dramatically. My notebooks from the time are described as “fast assimilation material”, which referred to the way my knowledge was written down.

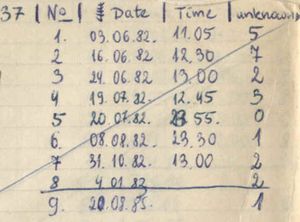

In the years 1982-1983, I kept expanding my “fast assimilation” knowledge in the areas of biochemistry and English. I would review my pages of information from time to time to reduce forgetting. My retention improved but it was only a matter of time before I would hit the wall again. The more pages I had, the less frequent the review, and the more obvious the problem of leaking memory. Here is an example of a repetition history from that time:

Between June 1982 and December 1984, my English-Polish word-pairs notebook included 79 pages looking like this:

Figure: A typical page from my English-Polish words notebook started in June 1982. Word pairs would be listed on the left. Review history would be recorded on the right. Recall errors would be marked as dots in the middle

Those 79 pages would encompass a mere 2794 words. This is just a fraction of what I needed, and already quite a headache to review. Interestingly, I started learning English in an active way, i.e. using Polish-English word pairs, only in 1984, i.e. with a two-year delay. I was simply late to discover that passive knowledge of vocabulary is OK for reading, but it is not enough to speak a language. This kind of ignorance after 6 years of schooling is the norm. Schools do a lot of drilling, but shed very little light on what makes efficient learning.

In late 1984, I decided to improve the review process and carry out an experiment that has changed my life. In the end, three decades later, I am super-proud to notice that it actually affected millions. It has opened the floodgates. We have an era of faster and better learning.

This is how this initial period was described in my Master’s Thesis in 1990:

Archive warning: Why use literal archives?

This text is part of: “Optimization of learning” by Piotr Wozniak (1990)

It was 1982 when I made my first observations concerning the mechanism of memory that were later used in the formulation of the SuperMemo method. As a then student of molecular biology I was overwhelmed by the amount of knowledge that was required to pass exams in mathematics, physics, chemistry, biology, etc. The problem was not in being unable to master the knowledge. Usually 2-3 days of intensive studying were enough to pack the head with data necessary to pass an exam. The frustrating point was that only an infinitesimal fraction of newly acquired wisdom could remain in memory after few months following the exam.

My first observation, obvious for every attentive student, was that one of the key elements of learning was active recall. This observation implies that passive reading of books is not sufficient if it is not followed by an attempt to recall learned facts from memory. The principle of basing the process of learning on recall will be later referred to as the active recall principle. The process of recalling is much faster and not less effective if the questions asked by the student are specific rather than general. It is because answers to general questions contain redundant information necessary to describe relations between answer subcomponents.

To illustrate the problem let us imagine an extreme situation in which a student wants to master knowledge contained in a certain textbook, and who uses only one question in the process of recall: What did you learn from the textbook? Obviously, information describing the sequence of chapters of the book would be helpful in answering the question, but it is certainly redundant for what the student really wants to know. The principle of basing the process of recall on specific questions will be later referred to as the minimum information principle. This principle appears to be justified not only because of the elimination of redundancy.

Having the principles of active recall and minimum information in mind, I created my first databases (i.e. collections of questions and answers) used in an attempt to retain the acquired knowledge in memory. At that time the databases were stored in a written form on paper. My first database was started on June 6, 1982, and was composed of pages that contained about 40 pairs of words each. The first word in a pair (interpreted as a question) was an English term, the second (interpreted as an answer) was its Polish equivalent. I will refer to these pairs as items. I repeated particular pages in the database in irregular intervals (dependent mostly on the availability of time) always recording the date of the repetition, items that were not remembered and their number. This way of keeping the acquired knowledge in memory proved sufficient for a moderate-size database on condition that the repetitions were performed frequently enough.

The birthday of spaced repetition: July 31, 1985

Intuitions

In 1984, my reasoning about memory was based on two simple intuitions that probably all students have:

- if we review something twice, we remember it better. That’s pretty obvious, isn’t it? If we review it 3 times, we probably remember it even better

- if we remember a set of notes, they will gradually disappear from memory, i.e. not all at once. This is easy to observe in life. Memories have different lifetimes

These two intuitions should make everyone wonder: how fast and how many notes we lose and when we should review next?

To this day, I am amazed that very few people ever bothered to measure that “optimum interval”. When I measured it myself, I was sure I would find more accurate results in books on psychology. I did not.

Experiment

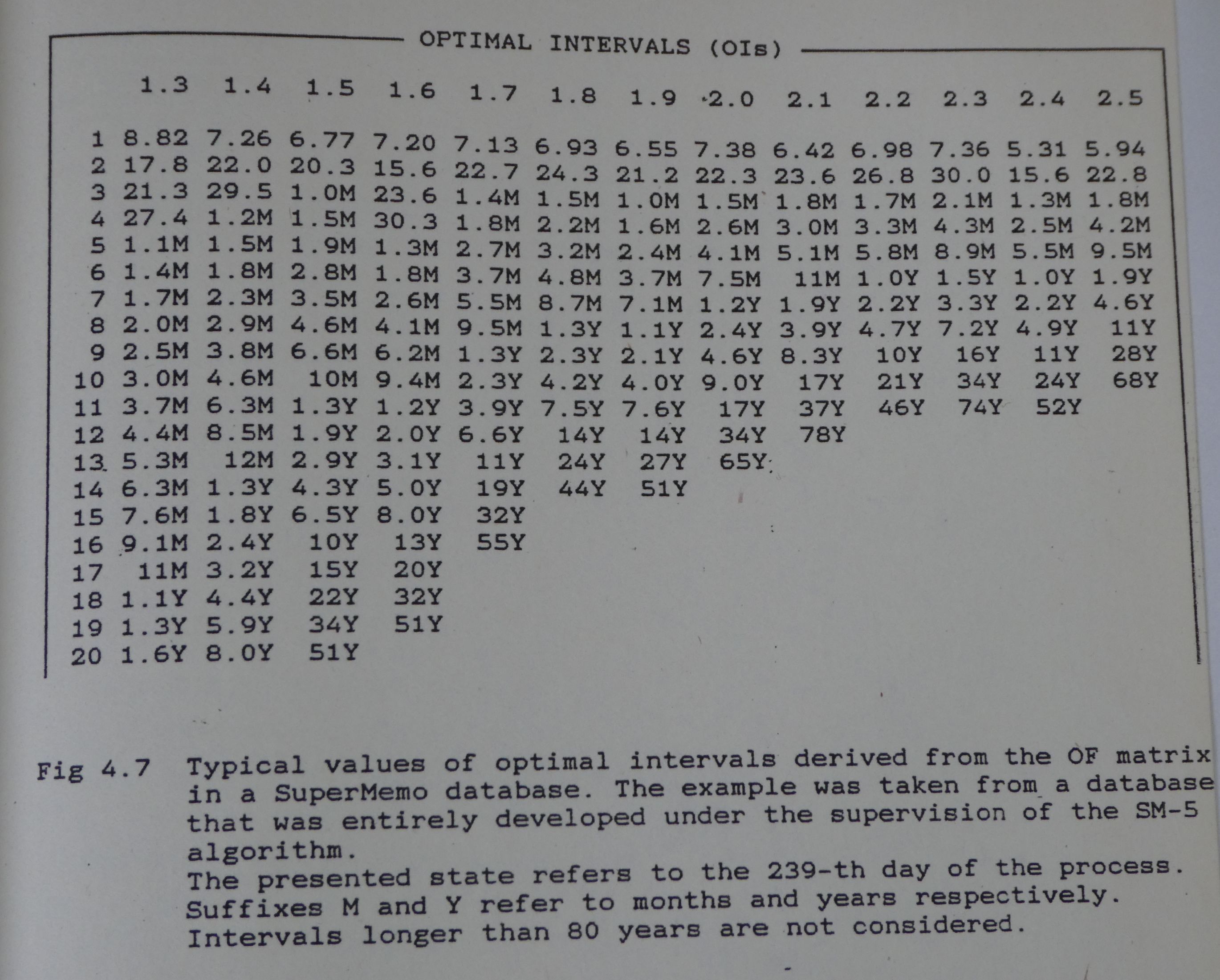

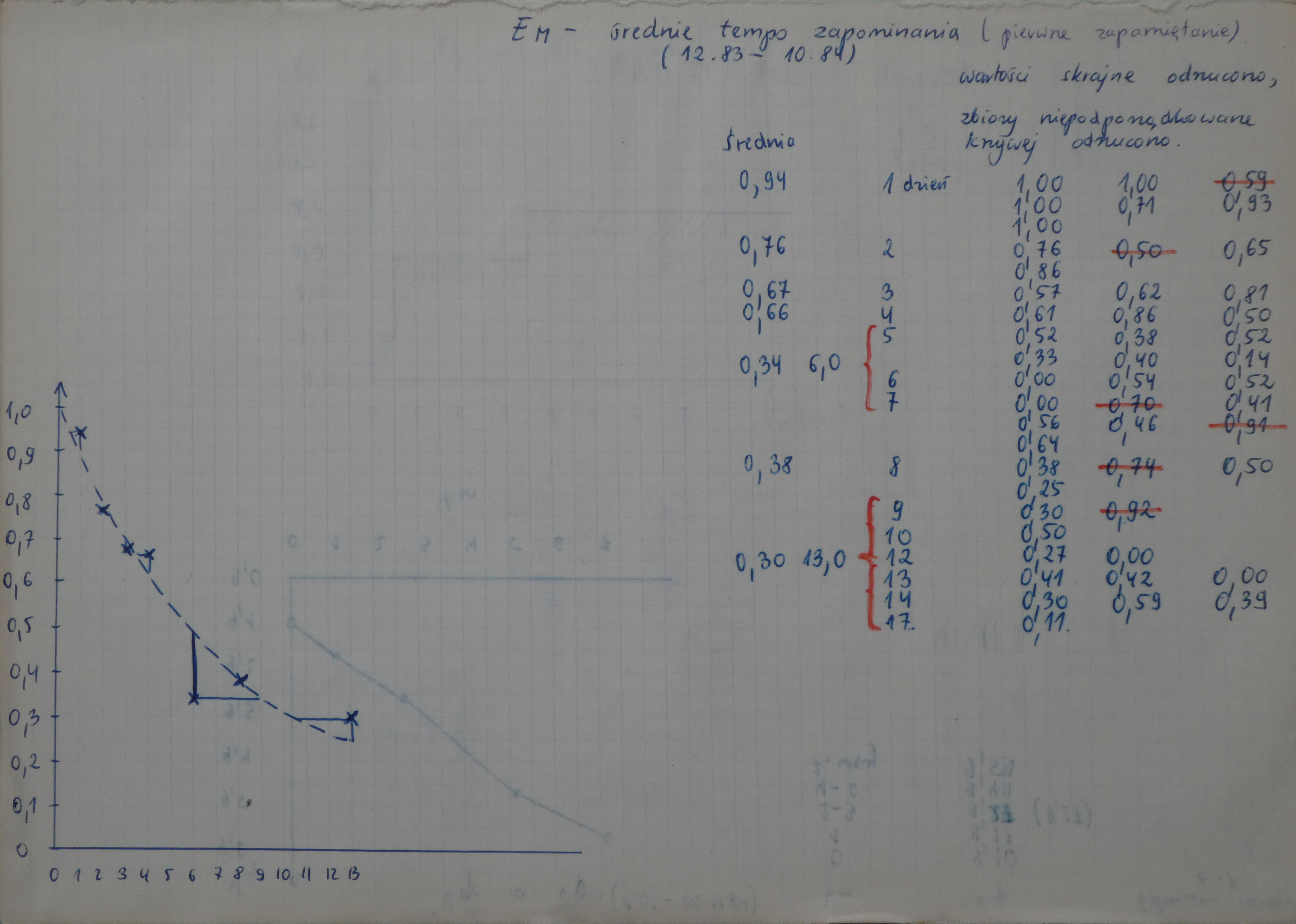

The following simple experiment led to the birth of spaced repetition. It was conducted in 1985 and first described in my Master’s Thesis in 1990. It was used to establish optimum intervals for the first 5 repetitions of pages of knowledge. Each page contained around 40 word-pairs and the optimum interval was to approximate the moment in time when roughly 5-10% of that knowledge was forgotten. Naturally, the intervals would be highly suited for that particular type of learning material and for a specific person, in this case, me. In addition, to speed things up, the measurement samples were small. Note that this was not a research project. It was not intended for publication. The goal was to just speed up my own learning. I was convinced someone else must have measured the intervals much better, but 13 years before the birth of Google, I thought measuring the intervals would be faster than digging into libraries to find better data. The experiment ended on Aug 24, 1985, which I originally named the birthday of spaced repetition. However, while writing this text in 2018, I found the original learning materials, and it seems my eagerness to learn made me formulate an outline of an algorithm and start learning human biology on Jul 31, 1985.

For that reason, I can say that the most accurate birthday of SuperMemo and computational spaced repetition was Jul 31, 1985.

By July 31, before the end of the experiment, the results seemed predictable enough. In later years, the findings of this particular experiment appeared pretty universal and could be extended to more areas of knowledge and to the whole healthy adult population. Even in 2018, the default settings of Algorithm SM-17 do not depart far from those rudimentary findings.

Spaced repetition was born on Jul 31, 1985

Here is the original description of the experiment from my Master’s Thesis with minor corrections to grammar and style. Emphasis in the text was added in 2018 to highlight important parts. If it seems boring and unreadable, compare Ebbinghaus 1885. This is the same style of writing in the area of memory. Only goals differed. Ebbinghaus tried to understand memory. 100 years later, I just wanted to learn faster:

Archive warning: Why use literal archives?

This text is part of: “Optimization of learning” by Piotr Wozniak (1990)

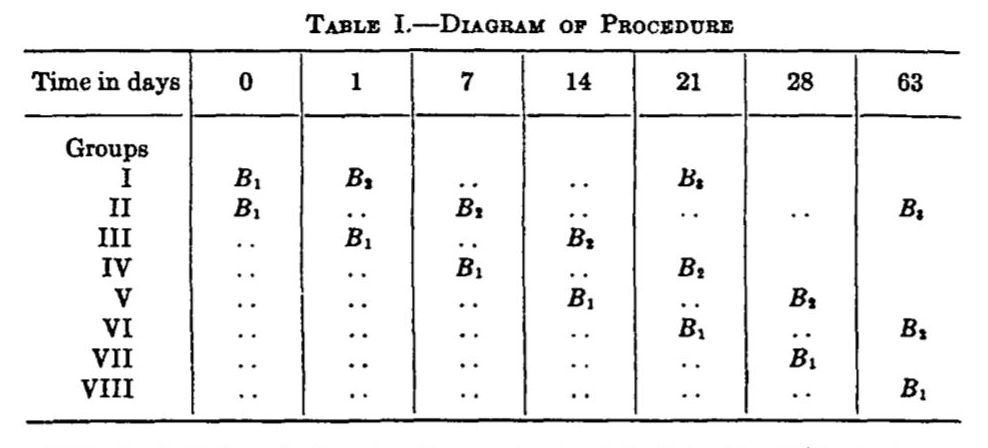

Experiment intended to approximate the length of optimum inter-repetition intervals (Feb 25, 1985 – Aug 24, 1985):

- The experiment consisted of stages A, B, C, … etc. Each of these stages was intended to calculate the second, third, fourth and further quasi-optimal inter-repetition intervals (the first interval was set to one day as it seemed the most suitable interval judging from the data collected earlier). The criterion for establishing quasi-optimal intervals was that they should be as long as possible and allow for not more than 5% loss of remembered knowledge.

- The memorized knowledge in each of the stages A, B, C, consisted of 5 pages containing about 40 items in the following form: Question: English word, Answer: its Polish equivalent.

- Each of the pages used in a given stage was memorized in a single session and repeated next day. To avoid confusion note, that in order to simplify further considerations I use the term first repetition to refer to memorization of an item or a group of items. After all, both processes, memorization and relearning, have the same form – answering questions as long as it takes for the number of errors to reach zero.

- In the stage A (Feb 25 – Mar 16), the third repetition was made in intervals 2, 4, 6, 8 and 10 days for each of the five pages respectively. The observed loss of knowledge after these repetitions was 0, 0, 0, 1, 17 percent respectively. The seven-day interval was chosen to approximate the second quasi-optimal inter-repetition interval separating the second and third repetitions.

- In the stage B (Mar 20 – Apr 13), the third repetition was made after seven-day intervals whereas the fourth repetitions followed in 6, 8, 11, 13, 16 days for each of the five pages respectively. The observed loss of knowledge amounted to 3, 0, 0, 0, 1 percent. The 16-day interval was chosen to approximate the third quasi-optimal interval. NB: it would be scientifically more valid to repeat the stage B with longer variants of the third interval because the loss of knowledge was little even after the longest of the intervals chosen; however, I was then too eager to see the results of further steps to spend time on repeating the stage B that appeared sufficiently successful (i.e. resulted in good retention)

- In the stage C (Apr 20 – Jun 21), the third repetitions were made after seven-day intervals, the fourth repetitions after 16-day intervals and the fifth repetitions after intervals of 20, 24, 28, 33 and 38 days. The observed loss of knowledge was 0, 3, 5, 3, 0 percent. The stage C was repeated for longer intervals preceding the fifth repetition (May 31 – Aug 24). The intervals and memory losses were as follows: 32-8%, 35-8%, 39-17%, 44-20%, 51-5% and 60-20%. The 35-day interval was chosen to approximate the fourth quasi-optimal interval.

It is not difficult to notice, that each of the stages of the described experiment took about twice as much time as the previous one. It could take several years to establish first ten quasi-optimal inter-repetition intervals. Indeed, I continued experiments of this sort in following years in order to gain deeper understanding of the process of optimally spaced repetitions of memorized knowledge. However, at that time, I decided to employ the findings in my day-to-day process of routine learning.

On July 31, 1985, I could already sense the outcome of the experiment. I started using SuperMemo on paper to learn human biology. That would be the best date to call for the birthday of SuperMemo.

The events of July 31, 1985

On July 31, 1985, SuperMemo was born. I had most of my data from my spaced repetition experiment available. As an eager practitioner, I did not wait for the experiment to end. I wanted to start learning as soon as possible. Having built a great deal of notes in human biology, I started converting those notes into Special Memorization Test format (SMT was the original name for SuperMemo, and spaced repetition).

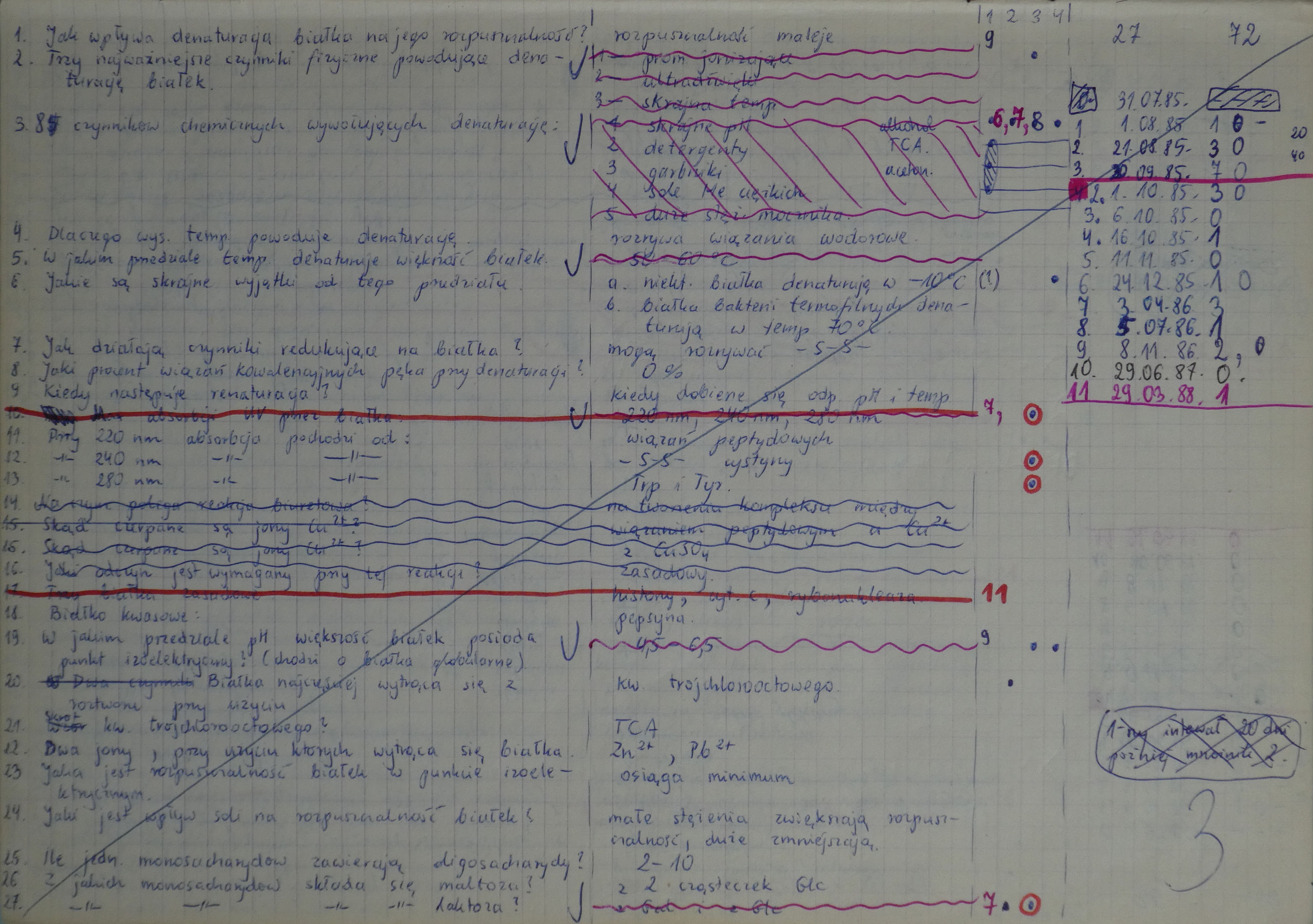

Figure: Human biology in the Special Memorization Test format started on Jul 31, 1985 (i.e. the birth of SuperMemo)

My calculations told me that, at 20 min/day, I would need 537 days to process my notes and finish the job by January 1987. I also computed that each page of the test would likely cost me 2 hours of life. Despite all the promise and speed of SuperMemo, this realization was pretty painful. The speed of learning in college is way too fast for the capacity of human memory. Now that I could learn much faster and better, I also realized I wouldn’t cover even a fraction of what I thought was possible. Schools make no sense with their volume and speed. On the same day, I found out that the Polish communist government lifted import tariffs on microcomputers. This should make it possible, at some point, to buy a computer in Poland. This opened a way to SuperMemo for DOS 2.5 years later.

Also on July 31, I noted that if vacation could last forever, I would achieve far more in learning and even more in life. School is such a waste of time. However, the threat of conscription kept me in line. I would enter a path that would make me enroll in university for another 5 years. However, most of that time was devoted to SuperMemo and I have few regrets.

My spaced repetition experiment ended on Aug 24, 1985. I also started learning English vocabulary. By that day, I managed to have most of my biochemistry material written down in pages for SuperMemo review.

Note: My Master’s Thesis mistakenly refers to Oct 1, 1985 as the day when I started learning human biology (not July 31 as seen in the picture above). Oct 1, 1985 was actually the first day of my computer science university and was otherwise unremarkable. With the start of the university, my time for learning and energy for learning were cut dramatically. Paradoxically, the start of school always seems to augur the end of good learning.

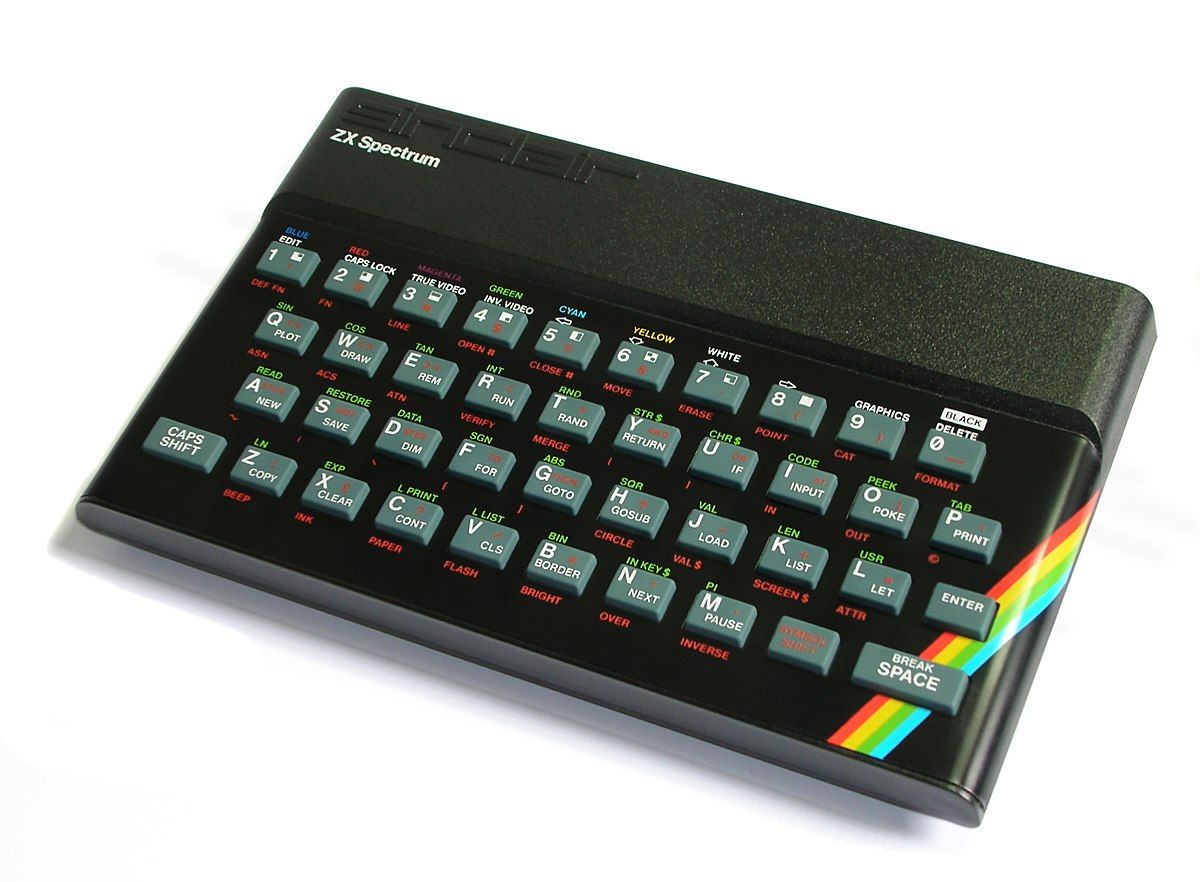

First spaced repetition algorithm: Algorithm SM-0, Aug 25, 1985

As a result of my spaced repetition experiment, I was able to formulate the first spaced repetition algorithm that required no computer. All learning had to be done on paper. I did not have a computer back in 1985. I was to get my first microcomputer, ZX Spectrum, only in 1986. SuperMemo had to wait for the first computer with a floppy disk drive (Amstrad PC 1512 in the year 1987).

I often get asked this simple question: “How can you formulate SuperMemo after an experiment that lasted 6 months? How can you predict what would happen in 20 years?”

The first experiments in reference to the length of optimum interval resulted in conclusions that made it possible to predict the most likely length of successive inter-repetition intervals without actually measuring retention beyond weeks! In short, it could be illustrated with the following reasoning. If the first months of research yielded the following optimum intervals: 1, 2, 4, 8, 16 and 32 days, you could hope with confidence that the successive intervals would increase by a factor of two.

Archive warning: Why use literal archives?

This text is part of: “Optimization of learning” by Piotr Wozniak (1990)

Algorithm SM-0 used in spaced repetition without a computer (Aug 25, 1985)

- Split the knowledge into smallest possible question-answer items

- Associate items into groups containing 20-40 elements. These groups are later called pages

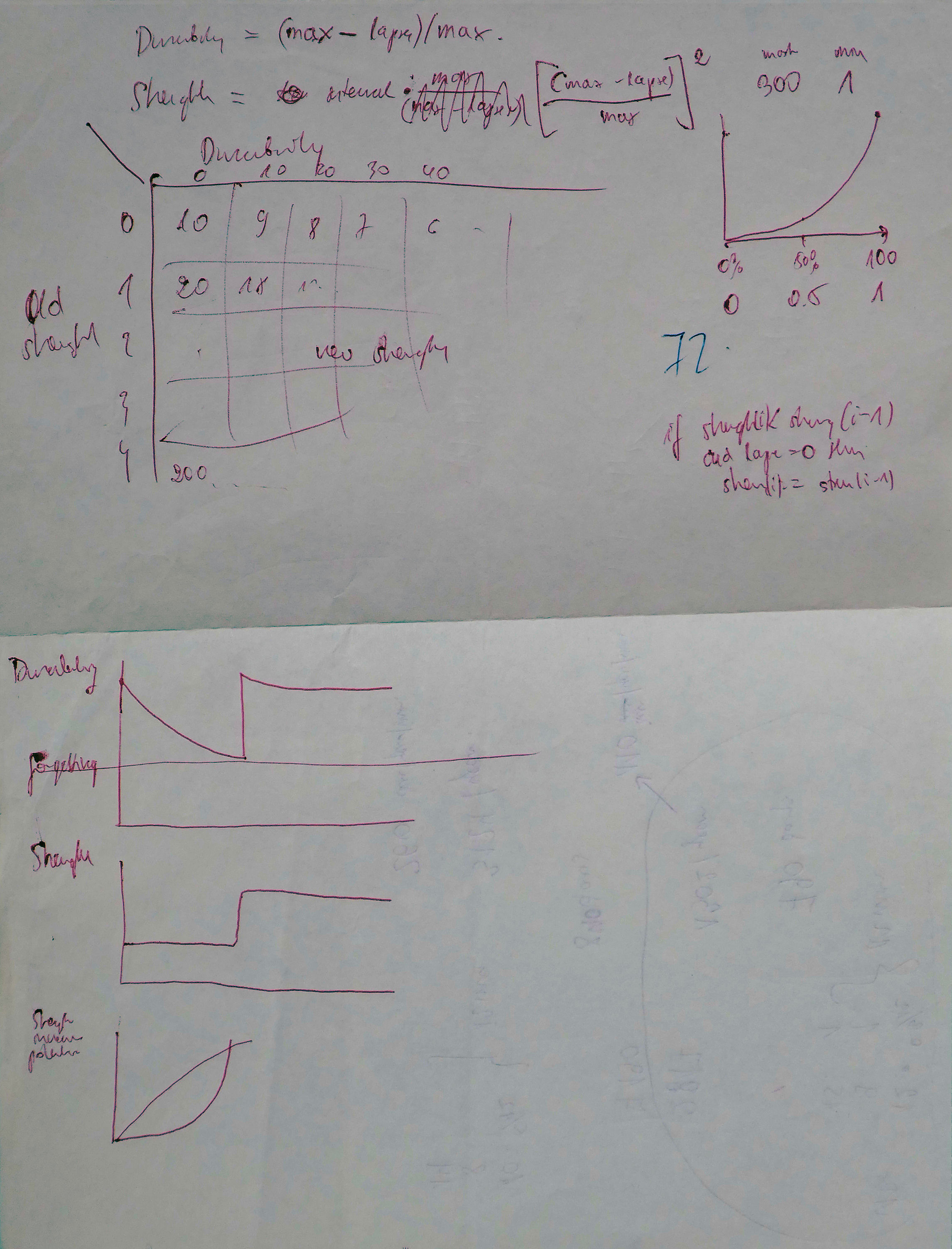

- Repeat whole pages using the following intervals (in days):

  - I(1)=1 day
  - I(2)=7 days
  - I(3)=16 days
  - I(4)=35 days
  - for i>4: I(i):=I(i-1)*2

  where:

- I(i) is the interval used after the i-th repetition

- Copy all items forgotten after the 35-day interval into newly created pages (without removing them from previously used pages). Those new pages will be repeated in the same way as pages with items learned for the first time

Note, that inter-repetition intervals after the fifth repetition were assumed to increase twice in subsequent repetitions. This fact was based on an intuition rather than on experiment. In two years of using the Algorithm SM-0 sufficient data were collected to confirm a reasonable accuracy of this assumption.
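The interval rule of Algorithm SM-0 can be sketched in a few lines of Python. This is only an illustration and not code from the thesis: the names `MEASURED_INTERVALS`, `sm0_interval`, and `sm0_schedule` are hypothetical, and the sketch follows the thesis convention in which the first repetition is the memorization session itself. The step of copying forgotten items into new pages is not modeled.

```python
from datetime import date, timedelta

# Intervals (in days) listed in Algorithm SM-0 for the first four repetitions.
MEASURED_INTERVALS = [1, 7, 16, 35]


def sm0_interval(i: int) -> int:
    """Return I(i), the interval in days used after the i-th repetition."""
    if i < 1:
        raise ValueError("repetition number must be 1 or greater")
    if i <= len(MEASURED_INTERVALS):
        return MEASURED_INTERVALS[i - 1]
    # For i > 4 each interval is twice the previous one: I(i) = I(i-1) * 2.
    return MEASURED_INTERVALS[-1] * 2 ** (i - len(MEASURED_INTERVALS))


def sm0_schedule(first_repetition: date, repetitions: int) -> list[date]:
    """Dates of successive repetitions of a single page (hypothetical helper)."""
    dates = [first_repetition]
    for i in range(1, repetitions):
        dates.append(dates[-1] + timedelta(days=sm0_interval(i)))
    return dates


if __name__ == "__main__":
    # Example: a page first memorized on Jul 31, 1985.
    for n, day in enumerate(sm0_schedule(date(1985, 7, 31), 7), start=1):
        print(f"repetition {n}: {day.isoformat()}")
```

Because the intervals double after the fourth one, the number of repetitions per page grows only logarithmically with time, which is why the workload per page keeps falling.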

To this day I hear some people use or even prefer the paper version of SuperMemo. Here is a description from 1992.

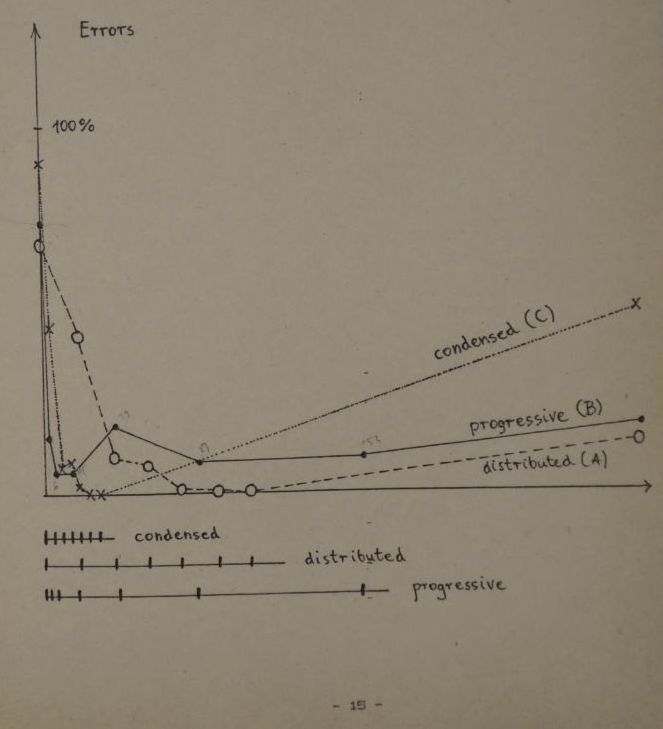

Note that the intuition that intervals should double is as old as the theory of learning. In 1932, C. A. Mace hinted at efficient learning methods in his book “The psychology of study”. He mentioned “active rehearsal” and “repetitive revisions” that should be spaced in gradually increasing intervals, roughly “intervals of one day, two days, four days, eight days, and so on”. This proposition was later taken up by other authors. Those included Paul Pimsleur and Tony Buzan who both proposed their own intuitions that involved very short intervals (in minutes) or “final repetition” (after a few months). All those ideas did not permeate well into the practice of study beyond the learning elites. Only a computer application made it possible to start learning effectively without studying the methodology.

That intuitive interval multiplication factor of 2 has also shown up in the context of studying the possibility of evolutionary optimization of memory in response to statistical properties of the environment: “Memory is optimized to meet probabilistic properties of the environment”

Despite all its simplicity, in my Master’s Thesis, I did not hesitate to call my new method “revolutionary”:

Archive warning: Why use literal archives?

This text is part of: “Optimization of learning” by Piotr Wozniak (1990)

Although the acquisition rate may not have seemed staggering, the Algorithm SM-0 was revolutionary in comparison to my previous methods because of two reasons:

- with the lapse of time, knowledge retention increased instead of decreasing (as it was the case with intermittent learning)

- in a long term perspective, the acquisition rate remained almost unchanged (with intermittent learning, the acquisition rate would decline substantially over time)

[…]

For the first time, I was able to reconcile high knowledge retention with infrequent repetitions that in consequence led to steadily increasing volume of knowledge remembered without the necessity to increase the timeload!

Retention of 80% was easily achieved, and could even be increased by shortening the inter-repetition intervals. This, however, would involve more frequent repetitions and, consequently, increase the timeload. The assumed repetition spacing provided a satisfactory compromise between retention and workload.

[…] The next significant improvement of the Algorithm SM-0 was to come only in 1987 after the application of a computer to supervise the learning process. In the meantime, I accumulated about 7190 and 2817 items in my new English and biological databases respectively. With the estimated working time of 12 minutes a day for each database, the average knowledge acquisition rate amounted to 260 and 110 items/year/minute respectively, while knowledge retention amounted to 80% at worst.
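The unit items/year/minute in the thesis excerpt can be made concrete with a short calculation. The sketch below assumes the unit means items memorized per year for each minute of daily study. That reading, and the function names `acquisition_rate` and `implied_years`, are assumptions made for illustration; the excerpt does not state the exact learning period, so the period is back-calculated from the quoted figures.

```python
def acquisition_rate(items: int, years: float, minutes_per_day: float) -> float:
    """Items memorized per year for each minute of daily study (assumed reading)."""
    return items / (years * minutes_per_day)


def implied_years(items: int, rate: float, minutes_per_day: float) -> float:
    """Learning period (in years) implied by a quoted rate; a back-calculation."""
    return items / (rate * minutes_per_day)


if __name__ == "__main__":
    # Figures quoted in the thesis excerpt: items, rate, and 12 minutes a day.
    for name, items, rate in [("English", 7190, 260), ("biology", 2817, 110)]:
        years = implied_years(items, rate, minutes_per_day=12)
        print(f"{name}: about {years:.1f} years of learning implied")
```

Under this reading, the quoted figures imply roughly 2.3 and 2.1 years of use, which is consistent with the statement that Algorithm SM-0 was used for about two years before the computer version arrived.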

Birth of SuperMemo from a decade’s perspective

A decade after the birth of SuperMemo, it became pretty well-known in Poland. Here is the same story as retold by J. Kowalski, Enter in 1994:

It was 1982, when a 20-year-old student of molecular biology at Adam Mickiewicz University of Poznan, Piotr Wozniak, became quite frustrated with his inability to retain newly learned knowledge in his brain. This referred to the vast material of biochemistry, physiology, chemistry, and English, which one should master wishing to embark on a successful career in molecular biology. One of the major incentives to tackle the problem of forgetting in a more systematic way was a simple calculation made by Wozniak which showed him that by continuing his work on mastering English using his standard methods, he would need 120 years to acquire all the important vocabulary. This not only prompted Wozniak to work on methods of learning, but also, turned him into a determined advocate of the idea of one language for all people (bearing in mind the time and money spent by the mankind on translation and learning languages). Initially, Wozniak kept increasing piles of notes with facts and figures he would like to remember. It did not take long to discover that forgetting requires frequent repetitions and a systematic approach is needed to manage all the newly collected and memorized knowledge. Using an obvious intuition, Wozniak attempted to measure the retention of knowledge after different inter-repetition intervals, and in 1985 formulated the first outline of SuperMemo, which did not yet require a computer. By 1987, Wozniak, then a sophomore of computer science, was quite amazed with the effectiveness of his method and decided to implement it as a simple computer program. The effectiveness of the program appeared to go far beyond what he had expected. This triggered an exciting scientific exchange between Wozniak and his colleagues at Poznan University of Technology and Adam Mickiewicz University. A dozen of students at his department took on the role of guinea pigs and memorized thousands of items providing a constant flow of data and critical feedback. 
Dr Gorzelańczyk from Medical Academy was helpful in formulating the molecular model of memory formation and modeling the phenomena occurring in the synapse. Dr Makałowski from the Department of Biopolymer Biochemistry contributed to the analysis of evolutionary aspects of optimization of memory (NB: he was also the one who suggested registering SuperMemo for Software for Europe). Janusz Murakowski, MSc in physics, currently enrolled in a doctoral program at the University of Delaware, helped Wozniak solve mathematical problems related to the model of intermittent learning and simulation of ionic currents during the transmission of action potential in nerve cells. A dozen of forthcoming academic teachers, with Prof. Zbigniew Kierzkowski in forefront, helped Wozniak tailor his program of study to one goal: combining all aspects of SuperMemo in one cohesive theory that would encompass molecular, evolutionary, behavioral, psychological, and even societal aspects of SuperMemo. Wozniak who claims to have discovered at least several important and never-published properties of memory, intended to solidify his theories by getting a PhD in neuroscience in the US. Many hours of discussions with Krzysztof Biedalak, MSc in computer science, made them both choose another way: try to fulfill the vision of getting with SuperMemo to students around the world.

1986: First steps of SuperMemo

SuperMemo on paper

On Feb 22, 1984, I computed that at my learning rate and my investment in learning, it would take me 26 years to master English (in SuperMemo, Advanced English standard is 4 years at 40 min/day). With the arrival of SuperMemo on paper that statistic improved dramatically.

In summer 1985, using SuperMemo on paper, I started learning with great enthusiasm. For the first time ever, I knew that all investment in learning would pay. Nothing could slip through the cracks. This early enthusiasm makes me wonder why I did not share my good news with others.

SuperMemo wasn’t a “secret weapon” that many users employ to impress others. I just thought that science must have answered all questions related to efficient learning. My impression was that I only patched my own poor access to western literature with a bit of my own investigation. My naivete of the time was astronomical. My English wasn’t good enough to understand news from the west. America was for me a land of super-humans who do super-science, land on the moon, make all major discoveries and will soon cure cancer and become immortal. At the same time, it was a land of Reagan who could blast Poland off the surface of the Earth with Pershing or cruise missiles. That gave me a couple of nightmares. Perhaps the only major source of stress in the early 1980s. I often ponder amazing inconsistencies in the brains of toddlers or kids. To me, the naivete of my early twenties tells me I must have been a late bloomer with very uneven development. Ignorance of English translated to the ignorance of the world. I was a young adult with areas of strength and areas of incredible ignorance. In that context, spaced repetition looks like a child of a need combined with ignorance, self-confidence, and passion.

University

In October 1985, I started my years at a computer science university. I lost my passion for the university in the first week of learning. Instead of programming, we were subjected to excruciatingly boring lectures on introductory topics in math, physics, electronics, etc. With a busy schedule, I might have easily become a SuperMemo dropout. Luckily, my love for biochemistry and my need for English would not let me slow down. I continued my repetitions, adding new pages from time to time. Most of all, I had a new dream: to have my own computer and do some programming on my own. One of the first things I wanted to implement was SuperMemo. I would keep my pages on the computer and have them scheduled automatically.

I casually mentioned my super-learning method to my high school friend Andrzej “Mike” Kubiak only in summer 1987 (Aug 29). We played football and music together. I finally showed him how to use SuperMemo on Nov 14, 1987. It took 836 days (2 years 3 months and 2 weeks) for me to recruit the first user of SuperMemo. Mike was later my guinea pig in trying out SuperMemo in procedural learning. He kept practicing computer-generated rhythms using a SuperMemo-like schedule. For Mike, SuperMemo was love at first sight. His vocabulary rocketed. He remained faithful for many years up to a point when the quality of his English outstripped the need for further learning. He is a yogi and his trip to India and regular use of English have consolidated the necessary knowledge for life.

ZX Spectrum

In 1986 and 1987, I kept thinking about SuperMemo on a computer more and more often. Strangely, initially, I did not think much about the problem of separating pages into individual flashcards. This illustrates how close-minded we can be when falling into a routine of doing the same things daily. To get to the status of 2018, SuperMemo had to undergo dozens of breakthroughs and similarly obvious microsteps. It is all so simple and obvious in hindsight. However, there are hidden limits of human thinking that prevented incremental reading from emerging a decade earlier. Only a fraction of those limits is in technology.

In my first year of university I had very little time and energy to spare, and most of that time I invested in getting my first computer: a ZX Spectrum (Jan 1986). I borrowed one from a friend for a day in Fall 1985 and was totally floored. I started programming “on paper” long before I got the toy. My first program was a “planning the day” tool, a precursor of Plan. The program was ready to be typed into the computer when I turned on my ZX Spectrum for the first time on Jan 4, 1986. From that day on, I spent most of my days programming, ignoring school and writing my programs on paper even during classes.

Figure: ZX Spectrum 8-bit personal home computer.

The Army

Early 1986 was marred by the threat of conscription. I thought 5 more years of university meant 5 more years of freedom. However, the army had different ideas. For them, a second major did not count, and I had to bend over backwards to avoid the service. My anger was tripled by the fact that I would never ever contemplate 12 months of separation from my best new friend: ZX Spectrum. I told the man in uniform that they really do not want to have an angry man with a gun in their ranks. Luckily, in the mess of the communist bureaucracy, I managed to slip the net and continue my education. To this day, I am particularly sensitive to issues of freedom. Conscription isn’t much different from slavery. It was not a conscription in the name of combating fascism. It was a conscription for mindless drilling, goosestep, early alarms, hot meals in a hurry and stress. If this was to serve the readiness of the Communist Bloc, it would be the readiness of a Good Soldier Švejk army. Today, millions of kids are sent to school in a similar conscription-like effort verging on slavery. Please read my “I would never send my kids to school” for my take on the coercive trampling of the human rights of children. I am sure that some of my sentiments have been shaped by the sense of enslavement from 1986.

On the day when the radioactive cloud from Chernobyl passed over Poznan, Poland, I was busy walking point to point across the vast city visiting military and civilian offices in my effort to avoid the army. I succeeded and summer 1986 was one of the sunniest ever. I spent my days on programming, jogging, learning with SuperMemo (on paper), swimming, football and more programming.

Summer 1986

My appetite for new software was insatiable. I wrote a program for musical composition, for predicting the outcomes of the World Cup, for tic-tac-toe in 3D, for writing school tests, and many more. I got a few jobs from the Department of Biochemistry (Adam Mickiewicz University). My hero, Prof. Augustyniak, needed software for simulating the melting of DNA, and for fast search of tRNA genes (years later that led to a publication). He also commissioned a program for regression analysis that later inspired progress in SuperMemo (esp. Algorithms SM-6 and SM-8).

While programming, I had SuperMemo at the back of my mind all the time. However, all my software was characterized by the absence of any database. The programs had to be read from a cassette tape, which was a major drag (it did not bother me back in 1986). It was simpler to keep my SuperMemo knowledge on paper. I started dreaming of a bigger computer. However, in Communist Poland, the cost was out of reach. Once I computed that an IBM PC would cost as much as my mom’s lifetime wages in the communist system. As late as 1989, I could not afford a visit to a toilet in Holland because it was so astronomically expensive when compared with wages in Poland.

PC 1512

My whole family pooled its resources. My cousin, Dr Garbatowski, arranged a special foreign currency account for Deutsche Mark transfers. By a miracle, I was able to afford a DM 1000 Amstrad PC 1512 from Germany. The computer was not smuggled, as was once reported in the press. My failed smuggling effort came two years earlier and concerned the ZX Spectrum. My friends from Zaire were to buy it for me in West Berlin. In the end, I bought a second-hand ZX Spectrum in Poland, at a good price, from someone who thought he was selling “just a keyboard”.

Figure: Amstrad PC-1512 DD. My version had only one diskette drive. Operating system MS-DOS had to be loaded from one diskette, Turbo Pascal 3.0 from another diskette, SuperMemo from yet another. By the time I had my first hard drive in 1991, my English collection was split into 3000-item pieces over 13 diskettes. I had many more for other areas of knowledge. On Jan 21, 1997, SuperMemo World tracked down that original PC and bought it back from its owner: Jarek Kantecki. The PC was fully functional for the whole decade. It is now buried somewhere in dusty archives of the company. Perhaps we will publish its picture at some point. The presented picture comes from Wikipedia.

My German Amstrad-Schneider PC 1512 was ordered from a Polish company Olech. Olech was to deliver it in June 1987. They did it in September. This cost me the whole summer of stress. Some time later, Krzysztof Biedalak ordered a PC from a Dutch company Colgar and never got a PC or money back. If this happened to me, I would have lost my trust in humanity. This would have killed SuperMemo. This might have killed my passion for computers. Biedalak, on the other hand, stoically got back to hard work and earned his money back and more. That would be one of the key personality differences between me and Biedalak. Stress resilience should be one of the components of development. I developed my stress resilience late with self-discipline training (e.g. winter swimming or marathons). Having lost his money, Biedalak did not complain. He got it back in no time. Soon I was envious of his new shiny PC. His hard work and determination in achieving goals was always a key to the company’s survival. It was his own privately earned money that helped SuperMemo World survive the first months. He did not get a gift from his parents. He could always do things on his own.

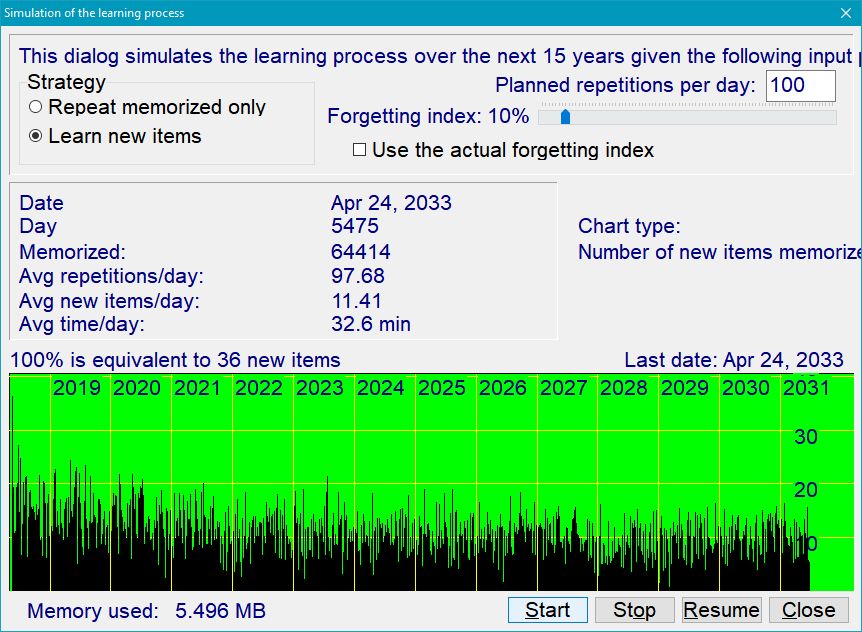

Simulating the learning process

On Feb 22, 1986, using my ZX Spectrum, I wrote a program to simulate the long-term learning process with SuperMemo. I was worried that with the build-up of material, the learning process would slow down significantly. However, my preliminary results were pretty counterintuitive: the progress is almost linear. There isn’t much slowdown in learning beyond the very initial period.

On Feb 25, 1986, I extended the simulation program by new functions that would answer “burning questions about memory”. The program ran on the Spectrum for 5 days before I could get full results for 80 years of learning. It confirmed my original findings.

On Mar 23, 1986, I managed to write the same simulation program in Pascal, which was a compiled language. This time, I could run an 80-year simulation in just 70 minutes. I got the same results. Today, SuperMemo still makes it possible to run similar simulations. The same procedure takes just a second or two.

Figure: SuperMemo makes it possible to simulate the course of learning over 15 years using real data collected during repetitions.

Some of the results of that simulation are valid today. Below I present some of the original findings. Some might have been amended in 1990 or 1994.

Learning curve is almost linear

The learning curve obtained by using the model, except for the very initial period, is almost linear.

Figure: Learning curve for a generic material, forgetting index equal to 10%, and daily working time of 1 minute.

New items take 5% of the time

In a long-term process, for the forgetting index equal to 10%, and for a fixed daily working time, the average time spent on memorizing new items is only 5% of the total time spent on repetitions. This value is almost independent of the size of the learning material.

Speed of learning

According to the simulation, the number of items memorized in consecutive years when working one minute per day can be approximated with the following equation:

NewItems=aar*(3*e^(-0.3*year)+1)

where:

- NewItems – items memorized in consecutive years when working one minute per day,

- year – ordinal number of the year,

- aar – asymptotic acquisition rate, i.e. the minimum learning rate reached after many years of repetitions (usually about 200 items/year/min)

In a long-term process, for the forgetting index equal to 10%, the average rate of learning for generic material can be approximated to 200-300 items/year/min, i.e. one minute of learning per day results in the acquisition of 200-300 items per year. Users of SuperMemo usually report the average rate of learning from 50-2000 items/year/min.
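The approximation above can be tabulated in a few lines. This is only a sketch, not the original simulation code: it takes the exponential term as e raised to -0.3*year, and the function name `new_items_per_year` and the default `aar` of 200 are illustrative choices.

```python
import math


def new_items_per_year(year: int, aar: float = 200.0) -> float:
    """Items memorized in a given year at one minute of work per day.

    Implements NewItems = aar * (3 * e^(-0.3 * year) + 1).
    `aar` is the asymptotic acquisition rate (items/year/min).
    """
    return aar * (3 * math.exp(-0.3 * year) + 1)


# Yearly acquisition falls from roughly three times aar towards aar itself.
for year in (1, 2, 5, 10, 20):
    print(year, round(new_items_per_year(year)))
```

The yearly figure keeps falling and levels off at aar, which is why aar is described as the minimum learning rate reached after many years.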

Workload

For a generic material and the forgetting index of about 10%, the function of time required daily for repetitions per item can roughly be approximated using the formula:

time=1/500*year^(-1.5)+1/30000

where:

- time – average daily time spent for repetitions per item in a given year (in minutes),

- year – year of the process.

As the time necessary for repetitions of a single item is almost independent of the total size of the learned material, the above formula may be used to approximate the workload for learning material of any size. For example, the total workload for a 3000-element collection in the first year will be 3000/500*1+3000/30000=6.1 (min/day).
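The 3000-element example can be reproduced with a short sketch. The function names `daily_minutes_per_item` and `daily_workload` are illustrative, and the formula is read with year raised to the power of -1.5, which is the reading consistent with the 6.1 min/day result for the first year.

```python
def daily_minutes_per_item(year: int) -> float:
    """Average daily repetition time per item (minutes) in a given year.

    Implements time = 1/500 * year^(-1.5) + 1/30000.
    """
    return (1 / 500) * year ** -1.5 + 1 / 30000


def daily_workload(items: int, year: int) -> float:
    """Total daily repetition time (minutes) for a collection of `items`."""
    return items * daily_minutes_per_item(year)


# First-year workload for a 3000-element collection, then later years.
for year in (1, 2, 5, 10):
    print(year, round(daily_workload(3000, year), 2))
```

Because the per-item time is treated as independent of collection size, the total simply scales with the number of items.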

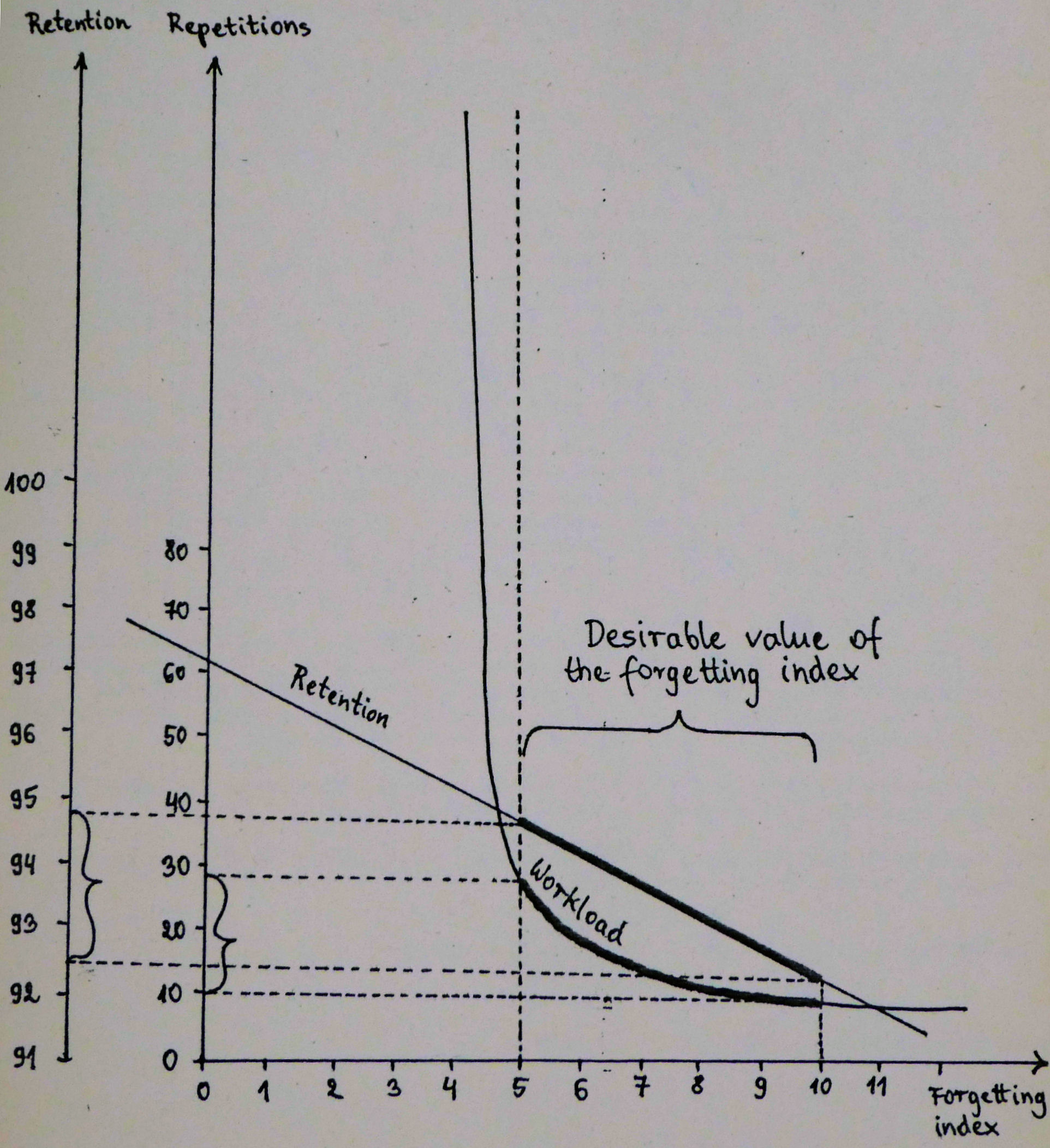

Figure: Workload, in minutes per day, in a generic 3000-item learning material, for the forgetting index equal to 10%.

Optimum forgetting index

The greatest overall knowledge acquisition rate is obtained for the forgetting index of about 20-30%. This results from the trade-off between reducing the repetition workload and increasing the relearning workload as the forgetting index progresses upward. In other words, high values of the forgetting index result in longer intervals, but the gain is offset by an additional workload coming from a greater number of forgotten items that have to be relearned.

For the forgetting index greater than 20%, the positive effect of long intervals on memory resulting from the spacing effect is offset by the increasing number of forgotten items.

Figure: Dependence of the knowledge acquisition rate on the forgetting index.

When the forgetting index drops below 5%, the repetition workload increases rapidly (see the figure above). The recommended value of the forgetting index used in the practice of learning is 6-14%.

Figure: Trade-off between the knowledge retention ( forgetting index) and the workload (number of repetitions of an average item in 10,000 days).

In later years, the value of the optimum forgetting index was found to differ depending on the tools used (e.g. Algorithm SM-17).

Memory capacity

The maximum lifetime capacity of the human brain to acquire new knowledge by means of learning procedures based on the discussed model can be estimated as no more than several million items. As nobody is likely to spend all his life on learning, I doubt I will ever see anyone with a million items in his memory.

1987: SuperMemo 1.0 for DOS

SuperMemo 1.0 for DOS: day by day (1987)

The SuperMemo history file says “Wozniak wrote his first SuperMemo in 16 evenings”. The reality was slightly more complex, and I thought I would describe it in more detail using my notes of the day.

I cannot figure out what I meant writing on Jul 3, 1987 that “I have an idea of a revolutionary program arranging my work and scientific experiments SMTests” (SMTests stands for SuperMemo on paper). A transition from paper to a computer seems like an obvious step. There must have been some mental obstacle on the way that required “thinking out of the box”. Unfortunately, I did not write down details. Today it only matters in that it illustrates how excruciatingly slowly a seemingly obvious idea may creep into the mind.

On Sep 8, 1987, my first PC arrived from Germany (Amstrad PC 1512). My enthusiasm was unmatched! I could not sleep. I worked all night. The first program I planned to write was to be used for mathematical approximations. SuperMemo was second in the pipeline.

Oct 16, 1987, Fri, in 12 hours I wrote my first SuperMemo in GW-Basic (719 minutes of non-stop programming). It was slow like a snail and buggy. I did not like it much. I did not start learning. Could this be the end of SuperMemo? Wrong choice of a programming language? Busy days at school kept me occupied with a million unimportant things. Typical school effect: learn nothing about everything. No time for creativity and your own learning. Luckily, all the time I used SuperMemo on paper. The idea of SuperMemo could not have died. It had to be automated sooner or later.

On Nov 14, 1987, Sat, SuperMemo on paper got its first user: Mike Kubiak. He was very enthusiastic. The fire kept burning. On Nov 18, I learned about Turbo Pascal. It did not work on my computer. In those days, if you had a wrong graphics card, you might struggle. Instead of Hercules, I had a text-mode monochrome (black-and-white) CGA. I managed to solve the problem by editing programs in the RPED text editor rather than in the Turbo Pascal environment. Later I got the right version for my display card. Incidentally, old SuperMemos show in colors. I was programming it in shades of gray and never knew how it really looked in the color mode.

Nov 21, 1987 was an important day. It was a Saturday. Days free from school are days of creativity. I hoped to get up at 9 am but I overslept by 72 minutes. This is bad for the plan, but this is usually good for the brain and performance. I started the day from SuperMemo on paper (reviewing English, human biology, computer science, etc.). Later in the day, I read my Amstrad PC manual, learned about Pascal and Prolog, spent some time thinking how human cortex might work, did some exercise, and in the late evening, in a slightly tired state of mind, in afterthought, decided to write SuperMemo for DOS. This would be my second attempt. However, this time I chose Turbo Pascal 3.0 and never regretted it. To this day, as a direct consequence, SuperMemo 17 code is written in Pascal (Delphi).

For the record, the name SuperMemo was proposed much later. In those days, I called my program: SMTOP for Super-Memorization Test Optimization Program. In 1988, Tomasz Kuehn insisted we call it CALOM for Computer-Aided Learning Optimization Method.

Nov 22, 1987 was a mirror copy of Nov 21. I concluded that I knew how the cortex works and that one day it would be nice to build a computer using similar principles (check Jeff Hawkins’s work). The fact that I returned to programming SuperMemo in the late evening, i.e. a very bad time for creative work, seems to indicate that the passion had not kicked in yet.

Nov 23, 1987 looked identical. I am not sure why I did not have any school obligations on Monday, but this might have saved SuperMemo. On Nov 24, 1987, the excitement kicked in and I worked for 8 hours straight (in the evening again). The program had a simple menu and could add new items to the database.

Nov 25, 1987 was wasted: I had to go to school, I was tired and sleepy. We had excruciatingly boring classes in computer architecture, probably a decade behind the status quo in the west.

Nov 26 was free again, and again I was able to catch up with SuperMemo work. The program grew to a huge 15,400 bytes. I concluded the program might be “very usefull” (sic!).

On Nov 27, I added 3 more hours of work after school.

Nov 28 was Saturday and I could add 12 enthusiastic hours of non-stop programming. SuperMemo now looked almost ready for use.

On Nov 29, Sunday, I voted for economic reforms and democratization in Poland. In the evening, I did not make much progress. I had to prepare an essay for my English class. The essay described the day in 1982 when I experimented with alcohol. I was a teetotaller, but as a biologist, I concluded I needed to know how alcohol affects the mind.

Nov 30 was wasted at school, but we had a nice walk home with Biedalak. We had a long conversation in English about our future. That future was mostly to be about science, probably in the US.

Dec 1-4 were wasted at school again. No time for programming. In a conversation with some Russian professor, I realized that I had completely forgotten Russian in a short 6 years. I used to be proudly fluent! I had to channel my programming time into some boring software for designing electronic circuits. I had to do it to credit a class in electronics. I had a deal with the teacher that I would not attend, just write this piece of software. I did not learn anything and to this day I mourn the waste of time. If I had been free, I could have invested this energy in SuperMemo.

Dec 5 was a Saturday. Free from school. Hurray! However, I had to start from wasting 4 hours on some “keycode procedure”. In those days, even decoding the key pressed might become a challenge. And then another hour wasted on changing some screen attributes. In addition, I added 6 hours for writing “item editor”. This way, I could conveniently edit items in SuperMemo. The effortless things you take for granted today: cursor left, cursor right, delete, up, new line, etc. needed a day of programming back then.

Dec 6 was a lovely Sunday. I spent 7 hours debugging SuperMemo, adding “final drill”, etc. The excitement kept growing. In a week, I might start using my new breakthrough speed-learning software.

On Monday, Dec 7, after school, I added a procedure for deleting items.

On Dec 8, while Reagan and Gorbachev signed their nuclear deal, I added a procedure for searching items and displaying some item statistics. SuperMemo “bloated” to 43,800 bytes.

Dec 9 was marred by school and programming for the electronics class.

On Dec 10, I celebrated power cuts at school. Instead of boring classes, I could do some extra programming.

On Dec 11, we had a lovely lecture with one of the biggest brains at school: Prof. Jan Węglarz. He insisted that he could do more in Poland than abroad. This was a powerful message. However, in 2018, his Wikipedia entry says that his two-phase method discovery was ignored, and later duplicated in the west because he opted for publishing in Polish. Węglarz created a formidable team of the best operations research brains in Poznan indeed. If I had not swayed in the direction of SuperMemo, I would surely have come with a begging hat to look for an employment opportunity. In the evening, I added a procedure for inspecting the number of items to review each day (today’s Workload).

Dec 12 was a Saturday. I expanded SuperMemo by a pending queue editor, and seemed ready to start learning, however, …

… on Dec 13, I was hit by a bombshell: “Out of memory”. I somehow managed to fix the problem by optimizing the code. The last option I needed to add was for the program to read the date. Yes. That was a big deal hack. Without it, I would need to type in the current date at the start of the work with the program. Finally, at long last, in the afternoon, on Dec 13, 1987, I was able to add my first items to my human biology collection: questions about the autonomic nervous system. By Dec 23, 1987, my combined paper and computer databases included 3795 questions on human biology (of which almost 10% already in SuperMemo). Sadly, I had to remove full repetition histories from SuperMemo on that day. There wasn’t enough space on 360K diskettes. Spaced repetition research would need to wait a few more years.

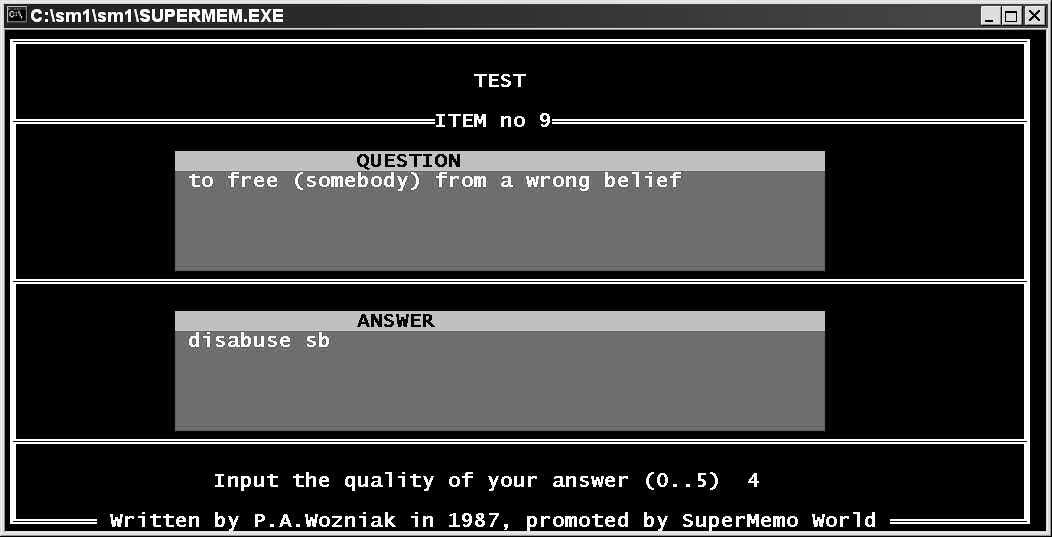

Figure: SuperMemo 1.0 for DOS (1987).

Algorithm SM-2

Here is the description of the algorithm used in SuperMemo 1.0. The description was taken from my Master’s Thesis written 2.5 years later (1990). SuperMemo 1.0 was soon replaced by a nicer SuperMemo 2.0 that I could give away to friends at university. There were insignificant updates to the algorithm that was named Algorithm SM-2 after the version of SuperMemo. This means there has never been Algorithm SM-1.

I mastered 1000 questions in biology in the first 8 months. Even better, I memorized exactly 10,000 items of English word pairs in the first 365 days working 40 min/day. This number was used as a benchmark in advertising SuperMemo in its first commercial days. Even today, 40 min is the daily investment recommended to master Advanced English in 4 years (40,000+ items).

To this day, Algorithm SM-2 remains popular and is still used by applications such as Anki, Mnemosyne and more.

Archive warning: Why use literal archives?

3.2. Application of a computer to improve the results obtained in working with the SuperMemo method

I wrote the first SuperMemo program in December 1987 (Turbo Pascal 3.0, IBM PC). It was intended to enhance the SuperMemo method in two basic ways:

- apply the optimization procedures to smallest possible items (in the paper-based SuperMemo items were grouped in pages),

- differentiate between the items on the base of their different difficulty.

Having observed that subsequent inter-repetition intervals are increasing by an approximately constant factor (e.g. two in the case of the SM-0 algorithm for English vocabulary), I decided to apply the following formula to calculate inter-repetition intervals:

I(1):=1

I(2):=6

for n>2 I(n):=I(n-1)*EF

where:

- I(n) – inter-repetition interval after the n-th repetition (in days)

- EF – easiness factor reflecting the easiness of memorizing and retaining a given item in memory (later called the E-Factor).

E-Factors were allowed to vary between 1.1 for the most difficult items and 2.5 for the easiest ones. At the moment of introducing an item into a SuperMemo database, its E-Factor was assumed to equal 2.5. In the course of repetitions, this value was gradually decreased in case of recall problems. Thus the greater problems an item caused in recall the more significant was the decrease of its E-Factor.

Shortly after the first SuperMemo program had been implemented, I noticed that E-Factors should not fall below the value of 1.3. Items having E-Factors lower than 1.3 were repeated annoyingly often and always seemed to have inherent flaws in their formulation (usually they did not conform to the minimum information principle). Thus not letting E-Factors fall below 1.3 substantially improved the throughput of the process and provided an indicator of items that should be reformulated. The formula used in calculating new E-Factors for items was constructed heuristically and did not change much in the following 3.5 years of using the computer-based SuperMemo method.

In order to calculate the new value of an E-Factor, the student has to assess the quality of his response to the question asked during the repetition of an item (my SuperMemo programs use the 0-5 grade scale – the range determined by the ergonomics of using the numeric key-pad). The general form of the formula used was:

EF’:=f(EF,q)

where:

- EF’ – new value of the E-Factor

- EF – old value of the E-Factor

- q – quality of the response

- f – function used in calculating EF’.

The function f had initially multiplicative character and was in later versions of SuperMemo program, when the interpretation of E-Factors changed substantially, converted into an additive one without significant alteration of dependencies between EF’, EF and q. To simplify further considerations only the function f in its latest shape is taken into account:

EF’:=EF-0.8+0.28*q-0.02*q*q

which is a reduced form of:

EF’:=EF+(0.1-(5-q)*(0.08+(5-q)*0.02))

Note, that for q=4 the E-Factor does not change.
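A quick numerical check shows that the two forms of the formula agree for every grade and that only q=4 leaves the E-Factor untouched. This is an illustrative sketch; the function names are mine and not part of any SuperMemo code.

```python
def ef_delta_reduced(q: int) -> float:
    """Change in E-Factor from EF' := EF - 0.8 + 0.28*q - 0.02*q*q."""
    return -0.8 + 0.28 * q - 0.02 * q * q


def ef_delta_full(q: int) -> float:
    """Change in E-Factor from EF' := EF + (0.1 - (5-q)*(0.08 + (5-q)*0.02))."""
    return 0.1 - (5 - q) * (0.08 + (5 - q) * 0.02)


for q in range(6):
    # Both forms must give the same change, up to floating-point noise.
    assert abs(ef_delta_reduced(q) - ef_delta_full(q)) < 1e-12
    print(q, f"{ef_delta_full(q):+.2f}")
```

A perfect response (q=5) raises the E-Factor by 0.1, a grade of 3 lowers it by 0.14, and a complete blackout (q=0) would lower it by 0.8 if the formula were applied to it.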

Let us now consider the final form of the SM-2 algorithm that with minor changes was used in the SuperMemo programs, versions 1.0-3.0 between December 13, 1987 and March 9, 1989 (the name SM-2 was chosen because of the fact that SuperMemo 2.0 was by far the most popular version implementing this algorithm).

Algorithm SM-2 used in the computer-based variant of the SuperMemo method and involving the calculation of easiness factors for particular items:

- Split the knowledge into smallest possible items.

- With all items associate an E-Factor equal to 2.5.

- Repeat items using the following intervals:

  I(1):=1

  I(2):=6

  for n>2: I(n):=I(n-1)*EF

  where:

  - I(n) – inter-repetition interval after the n-th repetition (in days),

  - EF – E-Factor of a given item.

- After each repetition assess the quality of repetition response in 0-5 grade scale:

  - 5 – perfect response

  - 4 – correct response after a hesitation

  - 3 – correct response recalled with serious difficulty

  - 2 – incorrect response; where the correct one seemed easy to recall

  - 1 – incorrect response; the correct one remembered

  - 0 – complete blackout.

- After each repetition modify the E-Factor of the recently repeated item according to the formula:

  EF’:=EF+(0.1-(5-q)*(0.08+(5-q)*0.02))

  where:

  - EF’ – new value of the E-Factor,

  - EF – old value of the E-Factor,

  - q – quality of the response in the 0-5 grade scale.

- If the quality response was lower than 3 then start repetitions for the item from the beginning without changing the E-Factor (i.e. use intervals I(1), I(2) etc. as if the item was memorized anew).

- After each repetition session of a given day repeat again all items that scored below four in the quality assessment. Continue the repetitions until all of these items score at least four.
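The steps above can be sketched in modern code. This is a minimal illustration and not the original Turbo Pascal source. All names (`ItemState`, `review`, `next_ef`, `needs_final_drill`) are hypothetical. Several details are my assumptions where the description leaves room: the 1.3 floor on E-Factors is applied, no upper cap is enforced, the freshly updated E-Factor is the one used to compute the next interval, intervals are kept as unrounded days, and a lapse counts as a new first repetition.

```python
from dataclasses import dataclass

MIN_EF = 1.3  # floor on E-Factors described in the text


@dataclass(frozen=True)
class ItemState:
    ef: float = 2.5        # E-Factor; every new item starts at 2.5
    n: int = 0             # repetitions completed in the current run
    interval: float = 0.0  # days until the next repetition


def next_ef(ef: float, q: int) -> float:
    """EF' := EF + (0.1 - (5-q)*(0.08 + (5-q)*0.02)), floored at MIN_EF."""
    return max(MIN_EF, ef + (0.1 - (5 - q) * (0.08 + (5 - q) * 0.02)))


def review(state: ItemState, q: int) -> ItemState:
    """Apply one graded repetition (q in 0..5) and return the new state."""
    if q not in range(6):
        raise ValueError("grade must be an integer from 0 to 5")
    if q < 3:
        # Lapse: restart from I(1) and leave the E-Factor unchanged.
        # Assumption: the failed repetition counts as a new first repetition.
        return ItemState(ef=state.ef, n=1, interval=1.0)
    ef = next_ef(state.ef, q)
    n = state.n + 1
    if n == 1:
        interval = 1.0   # I(1) := 1
    elif n == 2:
        interval = 6.0   # I(2) := 6
    else:
        # I(n) := I(n-1) * EF; assumption: the updated E-Factor is used.
        interval = state.interval * ef
    return ItemState(ef=ef, n=n, interval=interval)


def needs_final_drill(q: int) -> bool:
    """Items graded below four are repeated again on the same day."""
    return q < 4


# Usage: one item graded over six repetitions, including a lapse.
state = ItemState()
for grade in (5, 4, 4, 3, 2, 4):
    state = review(state, grade)
    print(grade, state.n, round(state.interval, 1), round(state.ef, 2))
```

Keeping the state immutable makes each repetition a pure function of the previous state and the grade, which is convenient for replaying repetition histories.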

The optimization procedure used in finding E-Factors proved to be very effective. In SuperMemo programs you will always find an option for displaying the distribution of E-Factors (later called the E-Distribution). The shape of the E-Distribution in a given database was roughly established within few months since the outset of repetitions. This means that E-Factors did not change significantly after that period and it is safe to presume that E-Factors correspond roughly to the real factor by which the inter-repetition intervals should increase in successive repetitions.

During the first year of using the SM-2 algorithm (learning English vocabulary), I memorized 10,255 items. The time required for creating the database and for repetitions amounted to 41 minutes per day. This corresponds to the acquisition rate of 270 items/year/min. The overall retention was 89.3%, but after excluding the recently memorized items (intervals below 3 weeks) which do not exhibit properly determined E-Factors the retention amounted to 92%. Comparing the SM-0 and SM-2 algorithms one must consider the fact that in the former case the retention was artificially high because of hints the student is given while repeating items of a given page. Items preceding the one in question can easily suggest the correct answer.

Therefore the SM-2 algorithm, though not stunning in terms of quantitative comparisons, marked the second major improvement of the SuperMemo method after the introduction of the concept of optimal intervals back in 1985. Separating items previously grouped in pages and introducing E-Factors were the two major components of the improved algorithm. Constructed by means of the trial-and-error approach, the SM-2 algorithm proved in practice the correctness of nearly all basic assumptions that led to its conception.

1988: Two components of memory

The two-component model of long-term memory lies at the foundation of SuperMemo, and is expressed explicitly in Algorithm SM-17. It differentiates between how stable knowledge is in long-term memory storage, and how easy it is to retrieve. It remains a little-known yet quintessential fact of the theory of learning that one can be fluent and still remember poorly.

Fluency is a poor measure of learning in the long term

Components of long-term memory

I first described the idea of two components of memory in a paper for my computer simulations class on Jan 9, 1988. In the same paper, I concluded that different circuits must be involved in declarative and procedural learning.

If you pause for a minute, the whole idea of two components should be pretty obvious. If you take two items right after a review, one with a short optimum interval and the other with a long optimum interval, the memory status of the two must differ. Both can be recalled perfectly (maximum retrievability), yet they must differ in how long they can last in memory (different stability). I was surprised I could not find any literature on the subject. However, if the literature has no mention of the existence of the optimum interval in spaced repetition, this seemingly obvious conclusion might be hiding behind another seemingly obvious idea: the progression of increasing interval in optimally spaced review. This is a lovely illustration of how human progress is incremental and agonizingly slow. We are notoriously bad at thinking out of the box. The darkest place is under the candlestick. This weakness can be broken with an explosion of communication on the web. I advocate less peer review and more bold hypothesizing. I speak of a fantastic example coming from Robin Clarke’s paper in reference to Alzheimer’s. Strict peer review is reminiscent of Prussian schooling: in the quest for perfection, we lose our creativity, then humanity, and ultimately the pleasure of life.

When I first presented my ideas to my teacher Dr Katulski on Feb 19, 1988, he was not too impressed, but he gave me a pass for computer simulations credit. Incidentally, a while later, Katulski became one of the first users of SuperMemo 1.0 for DOS.

In my Master’s Thesis (1990), I added a slightly more formal proof of the existence of the two components. That part of my thesis remained unnoticed.

In 1994, J. Kowalski wrote in Enter, Poland:

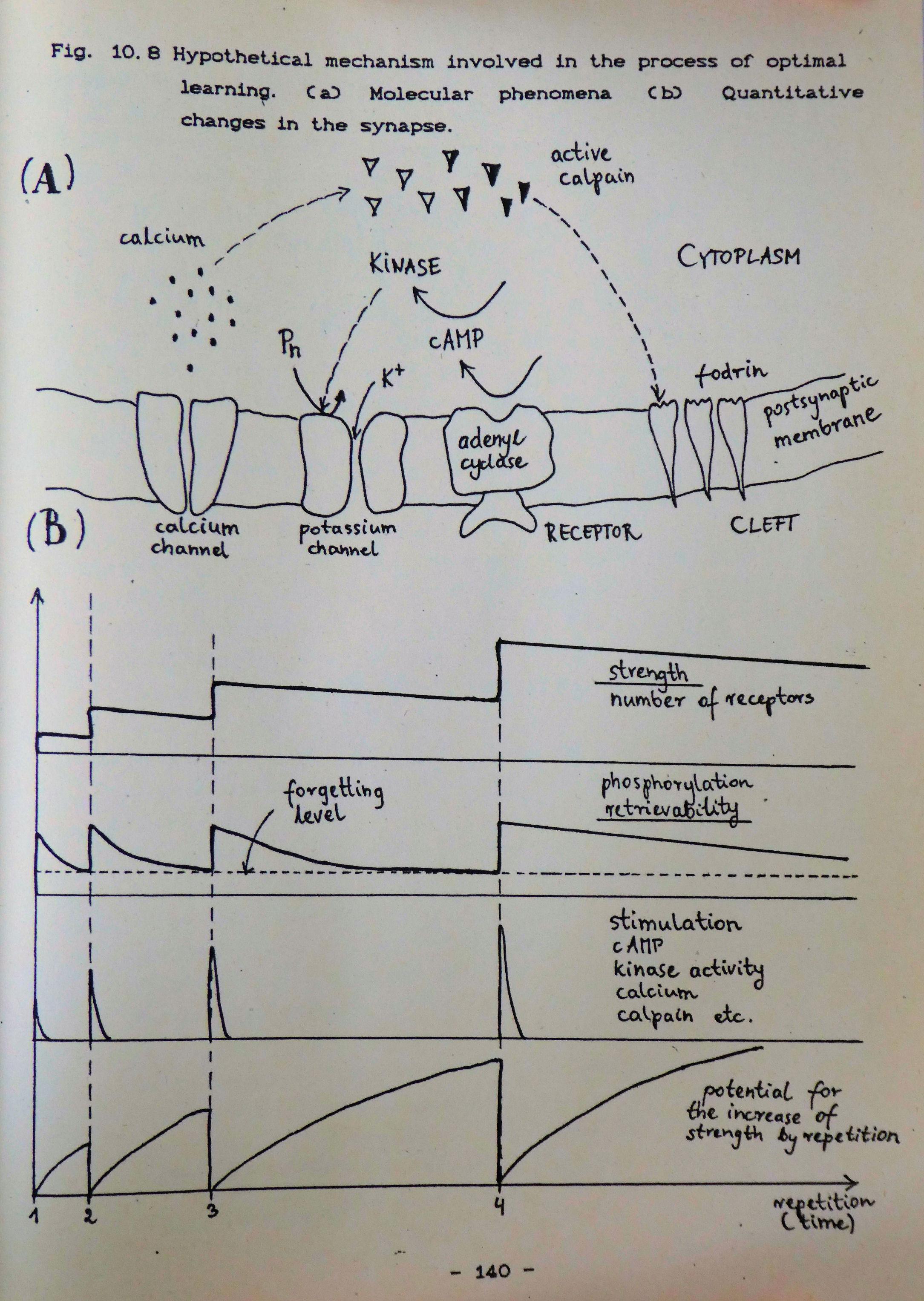

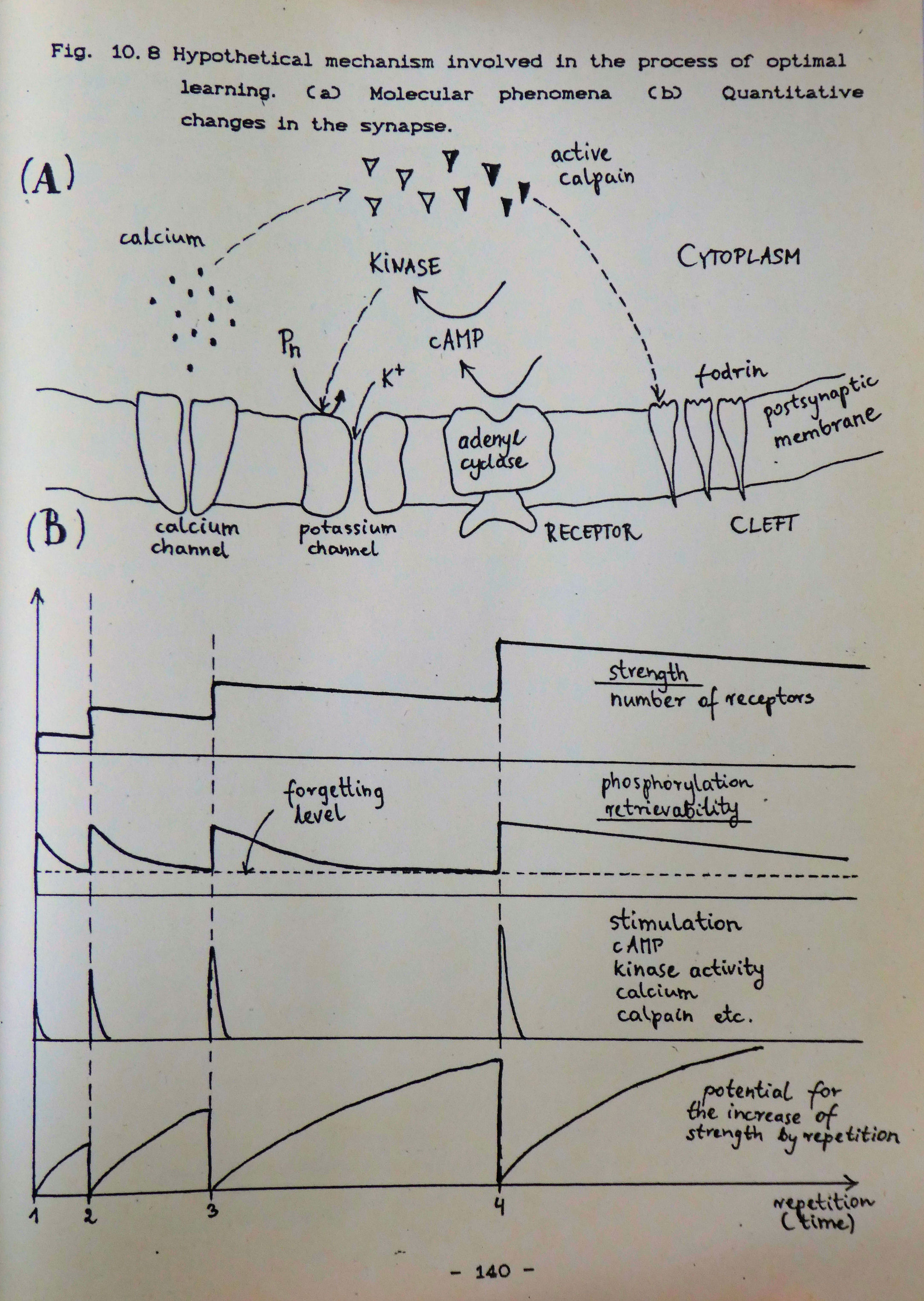

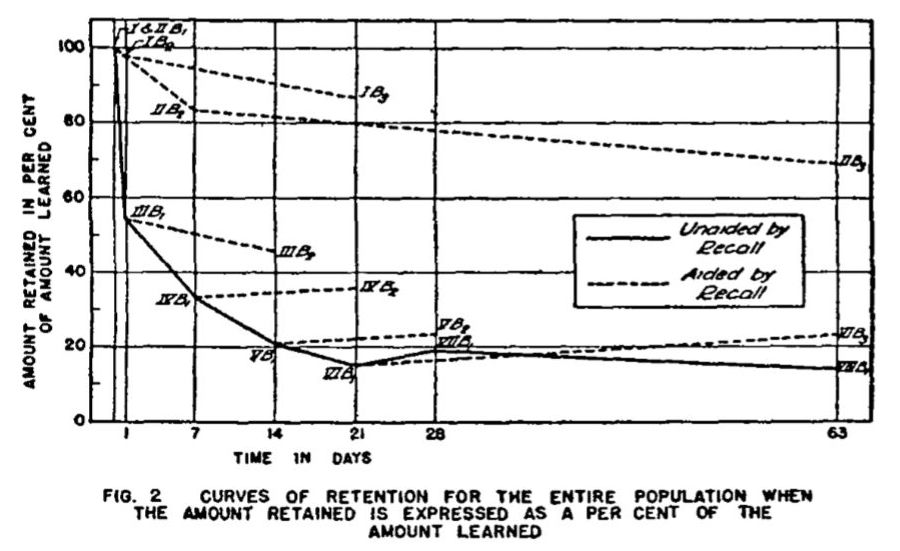

We got to the point where the evolutionary interpretation of memory indicates that it works using the principles of increasing intervals and the spacing effect. Is there any proof for this model of memory apart from the evolutionary speculation? In his Doctoral Dissertation, Wozniak discussed widely molecular aspects of memory and has presented a hypothetical model of changes occurring in the synapse in the process of learning. The novel element presented in the thesis was the distinction between the stability and retrievability of memory traces. This could not be used to support the validity of SuperMemo because of the simple fact that it was SuperMemo itself that laid the groundwork for the hypothesis. However, an increasing molecular evidence seems to coincide with the stability-retrievability model providing, at the same time, support for the correctness of assumptions leading to SuperMemo. In plain terms, retrievability is a property of memory which determines the level of efficiency with which synapses can fire in response to the stimulus, and thus elicit the learned action. The lower the retrievability the less you are likely to recall the correct response to a question. On the other hand, stability reflects the history of earlier repetitions and determines the extent of time in which memory traces can be sustained. The higher the stability of memory, the longer it will take for the retrievability to drop to the zero level, i.e. to the level where memories are permanently lost. According to Wozniak, when we learn something for the first time we experience a slight increase in the stability and retrievability in synapses involved in coding the particular stimulus-response association. In time, retrievability declines rapidly; the phenomenon equivalent to forgetting. At the same time, the stability of memory remains at the approximately same level. 
However, if we repeat the association before retrievability drops to zero, retrievability regains its initial value, while stability increases to a new level, substantially higher than at primary learning. Before the next repetition takes place, due to increased stability, retrievability decreases at a slower pace, and the inter-repetition interval might be much longer before forgetting takes place. Two other important properties of memory should also be noted: (1) repetitions have no power to increase the stability at times when retrievability is high (spacing effect), (2) upon forgetting, stability declines rapidly

We published our ideas with Drs Janusz Murakowski and Edward Gorzelańczyk in 1995. Murakowski perfected the mathematical proof. Gorzelańczyk fleshed out the molecular model. We have not heard much enthusiasm or feedback from the scientific community. The idea of two components of memory is like wine: the older it gets, the better it tastes. We keep wondering when it will receive wider recognition. After all, we do not live in Mendel’s time to keep a good gem hidden in some obscure archive. There are millions of users of spaced repetition and even if 0.1% got interested in the theory, they would hear of our two components. Today, even the newest algorithm in SuperMemo is based on the two-component model and it works like a charm. Ironically, users tend to flock to simpler solutions where all the mechanics of human memory remain hidden. Even at supermemo.com we make sure we do not scare customers with excess numbers on the screen.

The concept of the two components of memory has parallels in prior research, especially that of Bjork.

In the 1940s, scientists investigated habit strength and response strength as independent components of behavior in rats. Those concepts were later reformulated in Bjork’s disuse theory. Herbert Simon seems to have noticed the need for a memory stability variable in his paper in 1966. In 1969, Robert Bjork formulated the Strength Paradox: an inverse relationship between the probability of recall and the memory effect of a review. Note that this is a restatement of the spacing effect in terms of the two-component model, which is just a short step away from formulating the distinction between the variables of memory. This led to Bjork’s New Theory of Disuse (1992) that would distinguish between storage strength and retrieval strength. Those are close equivalents of stability and retrievability, respectively, with a slightly different interpretation of the mechanisms that underlie the distinction. Most strikingly, Bjork believes that when retrievability drops to zero, stable memories are still retained (in our model, stability becomes indeterminate). At the cellular level, Bjork might be right, at least for a while, but the practice of SuperMemo shows the power of complete forgetting, while, from the neural point of view, retaining memories in disuse would be highly inefficient independent of their stability. Last but not least, Bjork defines storage strength in terms of connectivity, which is very close to what I believe happens in good students: coherence affects stability.

Why aren’t the two components of memory entering mainstream research yet? I claim that if the human mind tends to be short-sighted, and we all are, by design, the mind of science can be truly strangulated by strenuous duties, publish or perish, battles for grants, hierarchies, conflicts of interest, peer review, teaching obligations and even the code of conduct. Memory researchers tend to live in a single dimension of “memory strength”. In that dimension, they cannot truly appreciate the true dynamics of molecular processes that need to be investigated to crack the problem. Ironically, progress may come from those who work in artificial intelligence or neural networks. The prodigious minds of Demis Hassabis or Andreas Knoblauch come up with twin ideas through independent reasoning, models, and simulations. Biologists will need to listen to the language of mathematics or computer science.

Two-component model in Algorithm SM-17

The two-component model of long-term memory underlies Algorithm SM-17. The success of Algorithm SM-17 is the ultimate practical proof for the correctness of the model.

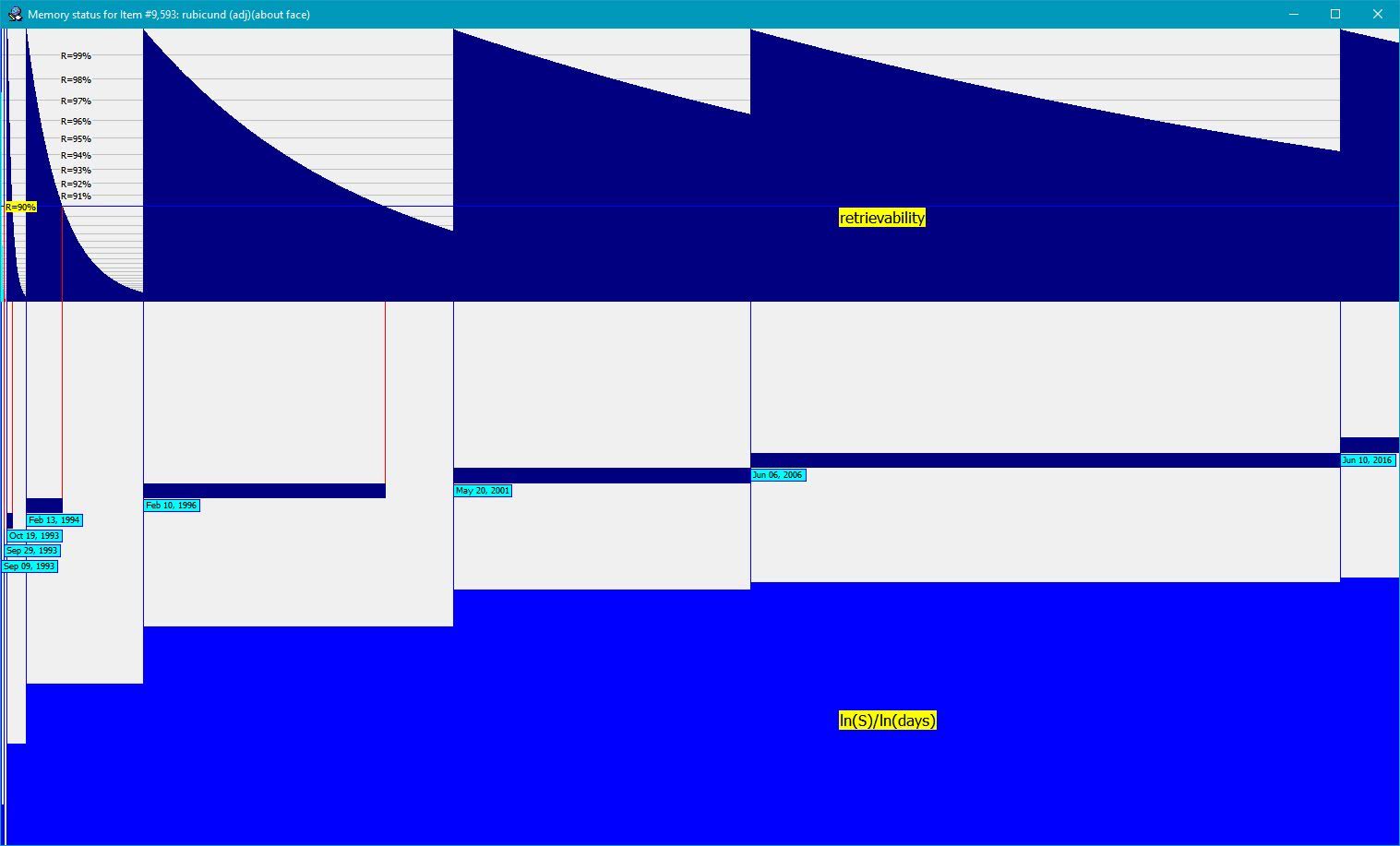

A graph of actual changes in the value of the two components of memory provides a conceptual visualization of the evolving memory status:

Figure: Changes in memory status over time for an exemplary item. The horizontal axis represents time spanning the entire repetition history. The top panel shows retrievability (tenth power, R^10, for easier analysis). Retrievability grid in gray is labelled by R=99%, R=98%, etc. The middle panel displays optimum intervals in navy. Repetition dates are marked by blue vertical lines and labelled in aqua. The end of the optimum interval where R crosses 90% line is marked by red vertical lines (only if intervals are longer than optimum intervals). The bottom panel visualizes stability (presented as ln(S)/ln(days) for easier analysis). The graph shows that retrievability drops fast (exponentially) after early repetitions when stability is low, however, it only drops from 100% to 94% in long 10 years after the 7th review. All values are derived from an actual repetition history and the three component model of memory.

Because real-life application of SuperMemo requires tackling learning material of varying difficulty, the third variable involved in the model is item difficulty (D). Some of the implications of item difficulty have also been discussed in the above article, in particular the impact of composite memories with subcomponents of different memory stability (S).

For the purpose of the new algorithm we have defined the three components of memory as follows:

- Memory Stability (S) is defined as the inter-repetition interval that produces an average recall probability of 0.9 at review time

- Memory Retrievability (R) is defined as the expected probability of recall at any time on the assumption of negatively exponential forgetting of homogeneous learning material with the decay constant determined by memory stability (S)

- Item Difficulty (D) is defined as the maximum possible increase in memory stability (S) at review mapped linearly into 0..1 interval with 0 standing for easiest possible items, and 1 standing for highest difficulty in consideration in SuperMemo (the cut off limit currently stands at stability increase 6x less than the maximum possible)
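The definitions of S and R can be combined into a single formula. The following is a minimal Python sketch, not the code of Algorithm SM-17: it assumes purely exponential forgetting and uses only the fact that recall probability equals 0.9 when the elapsed time equals S. The function names `retrievability` and `implied_stability` are hypothetical and introduced here for illustration only.

```python
import math

# Recall probability that defines stability S in the text above.
TARGET_RECALL = 0.9


def retrievability(t_days: float, stability_days: float) -> float:
    """Expected recall probability t_days after a review.

    Assumes exponential forgetting scaled so that R equals 0.9
    exactly when t_days equals stability_days, i.e. R = 0.9 ** (t / S).
    """
    return math.exp(math.log(TARGET_RECALL) * t_days / stability_days)


def implied_stability(t_days: float, recall: float) -> float:
    """Stability that would yield `recall` after t_days (same assumption)."""
    return t_days * math.log(TARGET_RECALL) / math.log(recall)


# Two items right after a review: both are perfectly retrievable,
# but they differ in stability and therefore diverge later.
fragile_now = retrievability(0, 5)
durable_now = retrievability(0, 500)
fragile_later = retrievability(30, 5)
durable_later = retrievability(30, 500)
```

Under these assumptions, both items start at R = 1, yet after 30 days the item with a stability of 5 days is nearly half forgotten while the item with a stability of 500 days is still above 99%. Applied to the last data point in the figure caption (a drop to 94% over 10 years), `implied_stability(3652.5, 0.94)` gives a stability of roughly 17 years.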

Proof

The actual proof from my Master’s Thesis follows. For a better take on this proof, see Murakowski proof.

Archive warning: Why use literal archives?

10.4.2. Two variables of memory: stability and retrievability

There is an important conclusion that comes directly from the SuperMemo theory that there are two, and not one, as it is commonly believed, independent variables that describe the conductivity of a synapse and memory in general. To illustrate the case, let us again consider the calpain model of synaptic memory. It is obvious from the model, that its authors assume that only one independent variable is necessary to describe the conductivity of a synapse. Influx of calcium, activity of calpain, degradation of fodrin and number of glutamate receptors are all examples of such a variable. Note that all the mentioned parameters are dependent, i.e. knowing one of them we could calculate all others; obviously only in the case if we were able to construct the relevant formulae. The dependence of the parameters is a direct consequence of causal links between all of them.

However, the process of optimal learning requires exactly two independent variables to describe the state of a synapse at a given moment:

- A variable that plays the role of a clock that measures time between repetitions. Exemplary parameters that can be used here are:

  - T_e – time that has elapsed since the last repetition (it belongs to the range <0,optimal-interval>),

  - T_l – time that has to elapse before the next repetition will take place (T_l=optimal-interval-T_e),

  - P_f – probability that the synapse will lose the trace of memory during the day in question (it belongs to the range <0,1>).

- A variable that measures the durability of memory. Exemplary parameters that can be used here are:

  - I(n+1) – optimal interval that should be used after the next repetition (I(n+1)=I(n)*C where C is a constant greater than one),

  - I(n) – current optimal interval,

  - n – number of repetitions preceding the moment in question, etc.

Let us now see if the above variables are necessary and sufficient to characterize the state of synapses in the process of time-optimal learning. To show that variables are independent, we will show that none of them can be calculated from the other. Let us notice that the I(n) parameter remains constant during a given inter-repetition interval, while the T_e parameter changes from zero to I(n). This shows that there is no function f that satisfies the condition:

T_e=f(I(n))

On the other hand, at the moment of subsequent repetitions, T_e always equals zero while I(n) has always a different, increasing value. Therefore there is no function g that satisfies the condition:

I(n)=g(T_e)

Hence independence of I(n) and T_e.
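The independence argument can be checked on a toy schedule. This is a sketch under stated assumptions, not part of the thesis: the first interval of 2 days, C=2, and the helper names `simulate_states` and `is_function` are all hypothetical choices made for illustration.

```python
from collections import defaultdict


def simulate_states(first_interval: int, c: int, repetitions: int):
    """Yield (t_e, interval) for every day of a schedule where
    each new interval is C times the previous one."""
    interval = first_interval
    for _ in range(repetitions):
        # The clock t_e runs while the interval stays constant.
        for t_e in range(interval):
            yield t_e, interval
        # At the repetition, the clock resets and the interval grows.
        interval *= c


def is_function(pairs) -> bool:
    """True if every first element maps to exactly one second element."""
    seen = defaultdict(set)
    for x, y in pairs:
        seen[x].add(y)
    return all(len(ys) == 1 for ys in seen.values())


states = list(simulate_states(first_interval=2, c=2, repetitions=4))

# Could the elapsed time be computed from the interval alone?
te_from_interval = is_function((i, t_e) for t_e, i in states)
# Could the interval be computed from the elapsed time alone?
interval_from_te = is_function((t_e, i) for t_e, i in states)
```

Both checks come out false for this schedule: one interval value coexists with many clock readings, and a clock reading of zero coexists with every interval, which is the pattern the proof relies on.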

To show that no other variables are necessary in the process of optimal learning, let us notice that at any given time we can compute all the moments of future repetitions using the following algorithm:

1. Let there elapse I(n)-T_e days.

2. Let there be a repetition.

3. Let T_e be zero and I(n) increase C times.

4. Go to 1.
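The steps above can be sketched in Python as follows. This is only an illustration of the loop in the thesis: the function name `future_repetitions`, the cap on the number of repetitions (the original loop never ends), and the example values (interval of 10 days, 4 days elapsed, C=2) are assumptions.

```python
def future_repetitions(current_interval: float, elapsed: float,
                       c: float, count: int) -> list[float]:
    """Return the days (counted from now) of the next `count` repetitions,
    given only the current interval, the elapsed time, and the constant C."""
    days = []
    now = 0.0
    interval, t_e = current_interval, elapsed
    for _ in range(count):
        now += interval - t_e  # step 1: wait out the rest of the interval
        days.append(now)       # step 2: a repetition takes place
        t_e = 0.0              # step 3: the clock resets ...
        interval *= c          # ... and the interval grows C times
    return days                # step 4 ("go to 1") is the loop itself


schedule = future_repetitions(current_interval=10, elapsed=4, c=2, count=4)
```

With these example values the repetitions fall 6, 26, 66 and 146 days from now. No information beyond the two variables and the constant C is needed, which is the sufficiency claim made in the text.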

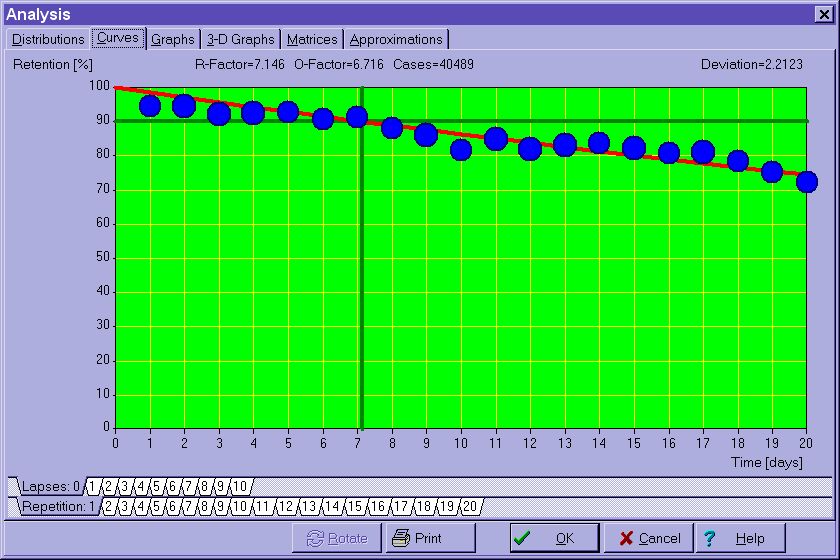

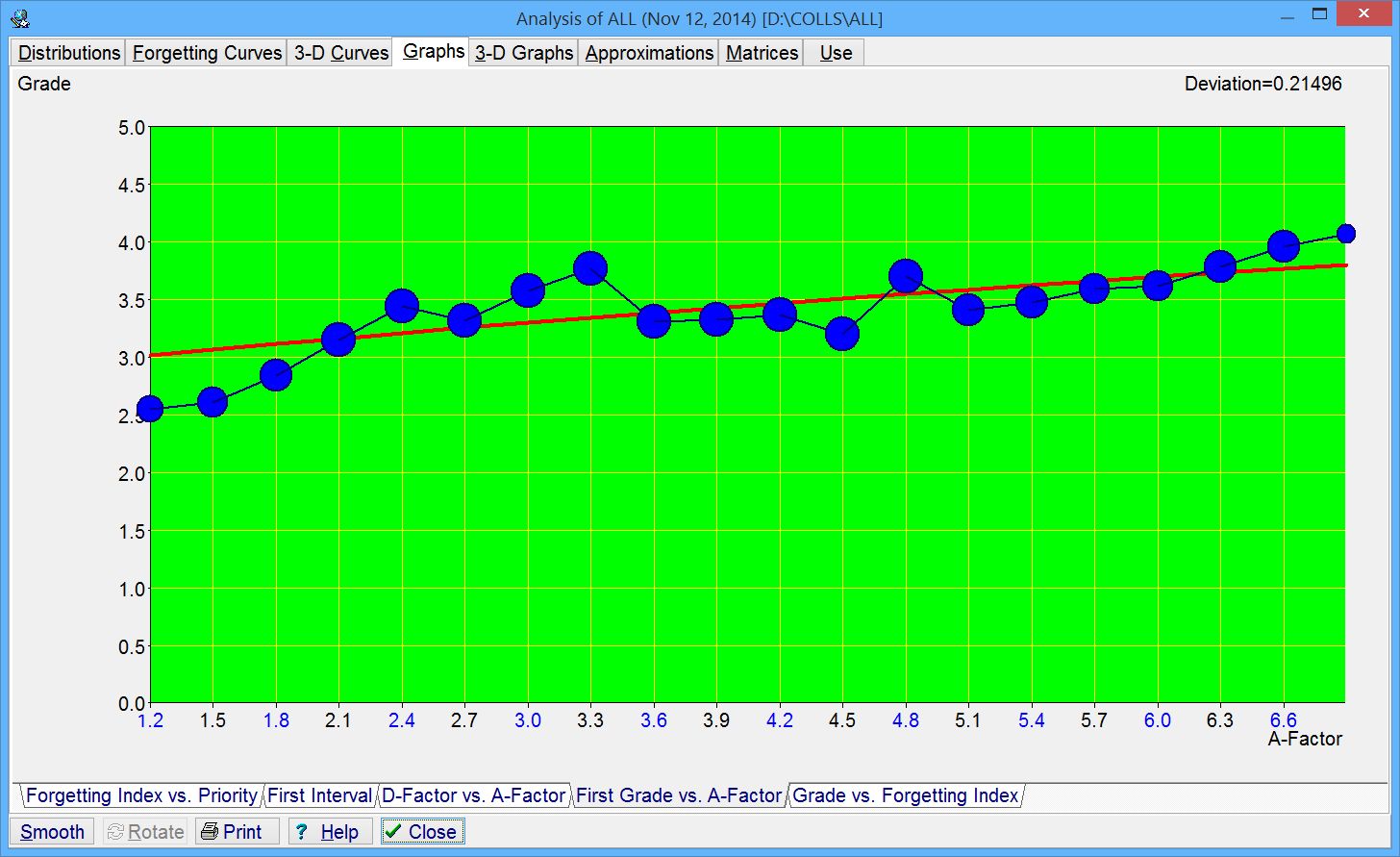

Note that the value of C is a constant characteristic for a given synapse and as such does not change in the process of learning. I will later use the term retrievability to refer to the first of the variables and the term stability to refer to the second one. To justify the choice of the first term, let me notice that we use to think that memories are strong after a learning task and that they fade away afterwards until they become no longer retrievable. This is retrievability that determines the moment at which memories are no longer there. It is also worth mentioning that retrievability was the variable that was tacitly assumed to be the only one needed to describe memory (as in the calpain model). The invisibility of the stability variable resulted from the fact that researchers concentrated their effort on a single learning task and observation of the follow-up changes in synapses, while the importance of stability can be visualized only in the process of repeating the same task many times. To conclude the analysis of memory variables, let us ask the standard question that must be posed in development of any biological model. What is the possible evolutionary advantage that arises from the existence of two variables of memory?